
Developments

If you want a more scientific focus, go to my research topics page. If you want to see art, go to my art gallery. If you are unfamiliar with Cats2D, learn about it at the Cats2D overview page. If you want to learn a few things about Goodwin, keep reading this page.

All unpublished results shown here are Copyright © 2016–2020 Andrew Yeckel, all rights reserved

I'm glad you asked

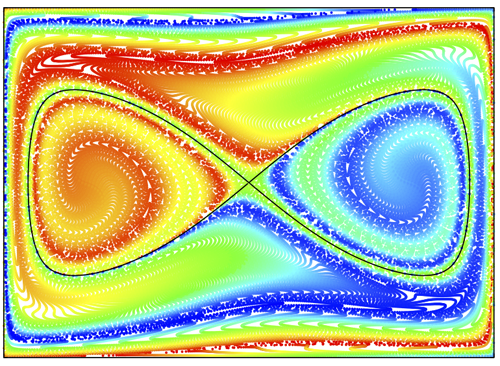

Why, yes, that is a Cats2D simulation featured on the cover of this distinguished math journal from the European Mathematical Society.

Inside the front cover it says: "The cover picture shows a simulation by Andrew Yeckel of a Kármán vortex street, inspired by Sadatoshi Taneda's well-known experimental photograph of the phenomenon."

I like that this journal focuses on nonlinear analysis, which happens to be a particular strength of Cats2D.

Disturbing the waters

I've added a semi-stochastic model of Brownian motion to particle path integration in Cats2D. The model assumes that random fluctuations are superposed on the deterministic velocity of the computed flow. In a fully stochastic model, particle velocities are updated on the time scale of thermal fluctuations to satisfy a Boltzmann probability distribution. These fluctuations occur on a time scale much shorter than any time scale of interest to continuum transport, which makes it feasible to replace stochastic behavior at small time scales by deterministic statistical behavior. The semi-stochastic model uses the solution to the diffusion equation as a probability distribution to update particle velocities at every time step of path integration. Some mathematical details are found here.
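A minimal sketch of one such step, assuming the common random-walk form: over a step dt the fundamental solution of the diffusion equation is a Gaussian with variance 2*D*dt in each coordinate, so a particle is advected with the computed velocity and then displaced by a sample from that Gaussian (equivalently, its velocity is perturbed by a random term of size sqrt(2*D/dt)). The names `brownian_step` and `uniform_flow`, the forward Euler advection, and the parameter values are illustrative assumptions, not the Cats2D integrator.

```python
import math
import random

def brownian_step(x, y, velocity, D, dt, rng):
    """Advance one particle by one time step.

    Deterministic part: forward Euler advection with the flow velocity
    (an illustrative choice; any path integrator could be used here).
    Stochastic part: a displacement drawn from the Gaussian fundamental
    solution of the diffusion equation, variance 2*D*dt per coordinate.
    """
    u, v = velocity(x, y)
    sigma = math.sqrt(2.0 * D * dt)
    return (x + u * dt + rng.gauss(0.0, sigma),
            y + v * dt + rng.gauss(0.0, sigma))

def uniform_flow(x, y):
    # Hypothetical stand-in for the computed velocity field.
    return 1.0, 0.0

if __name__ == "__main__":
    rng = random.Random(42)
    D, dt, n = 1.0e-3, 0.1, 20000
    xs = [brownian_step(0.0, 0.0, uniform_flow, D, dt, rng)[0] for _ in range(n)]
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / (n - 1)
    print(f"mean {mean:.4f} (expect {dt}), variance {var:.2e} (expect {2 * D * dt:.1e})")
```

The sample mean recovers the deterministic displacement u*dt and the sample variance recovers 2*D*dt, which is the statistical behavior the continuum diffusion equation predicts.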

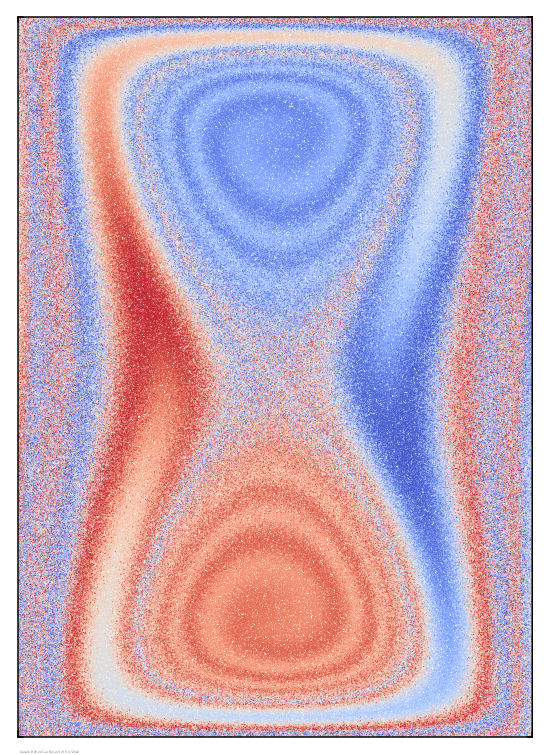

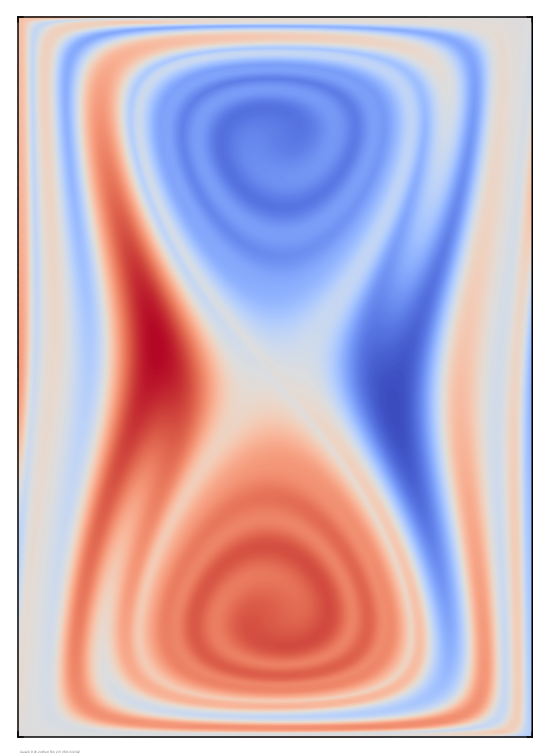

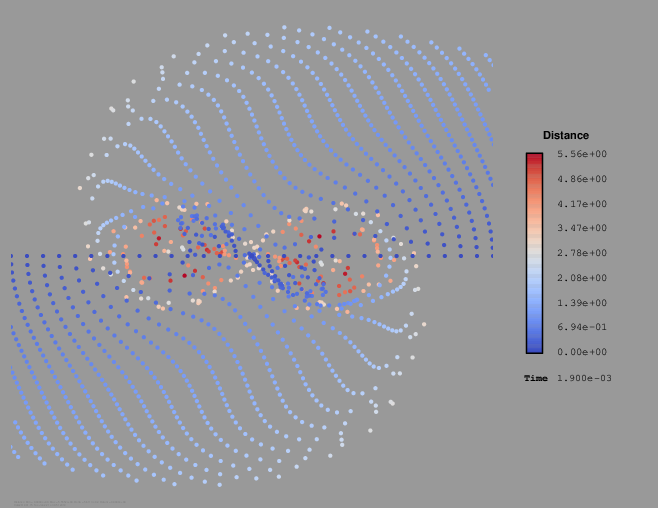

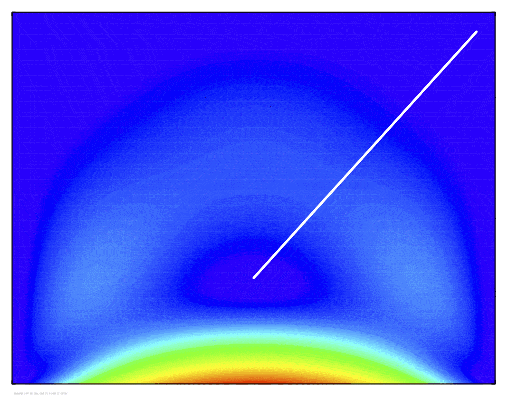

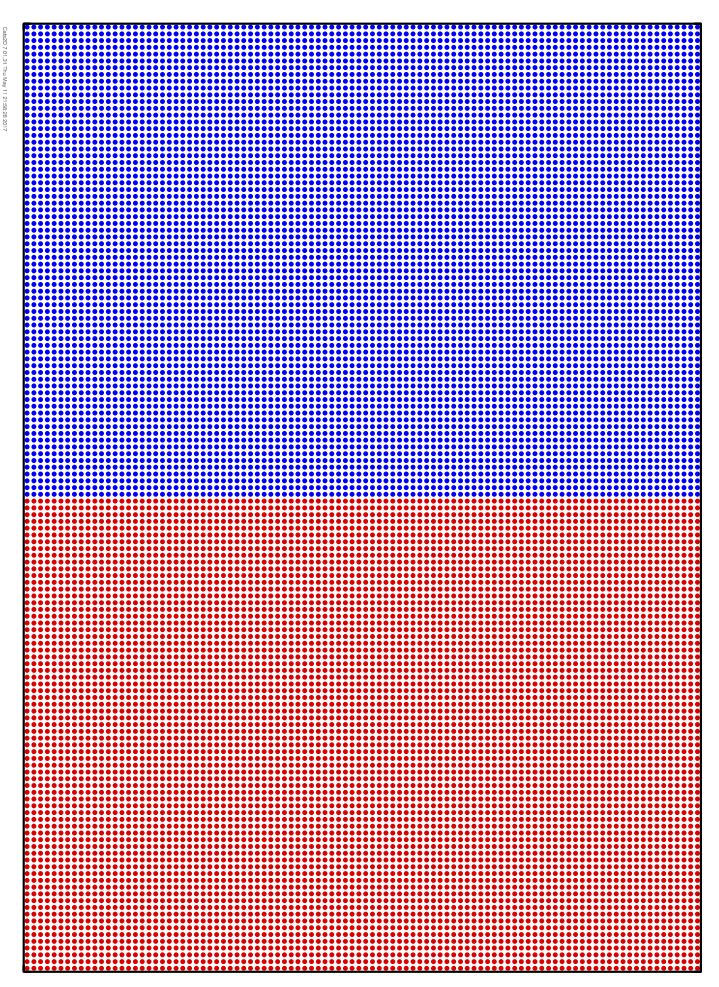

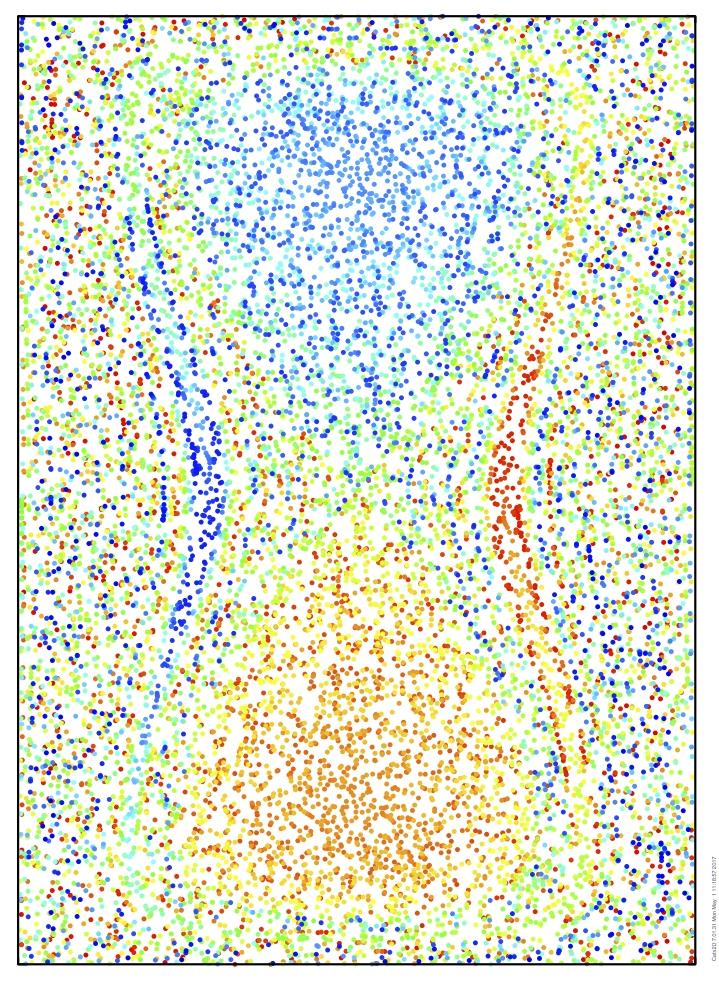

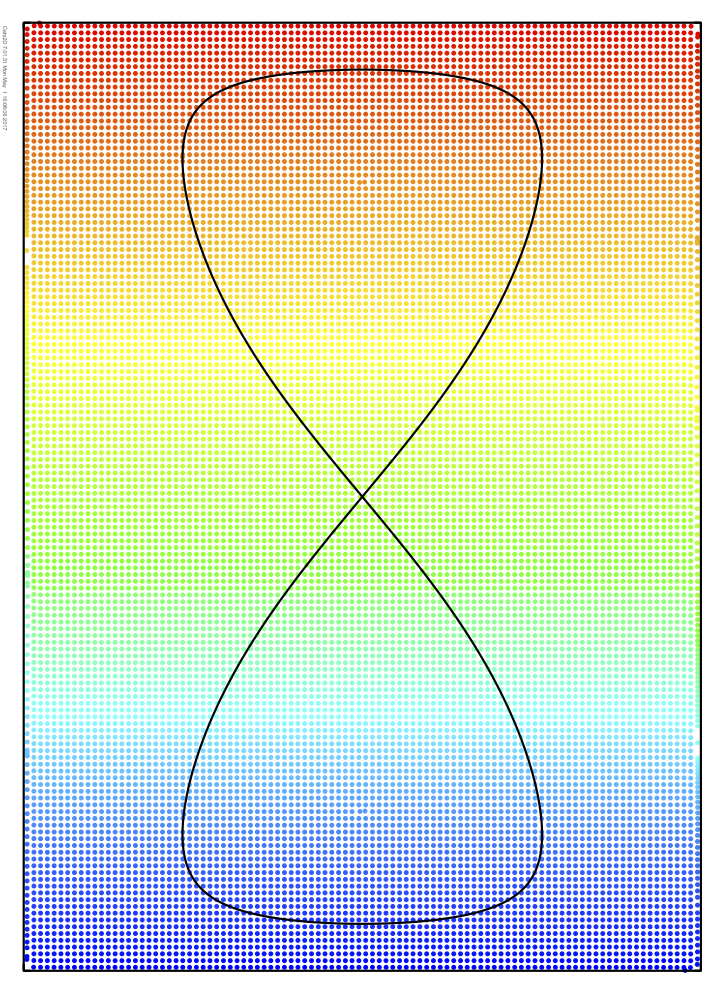

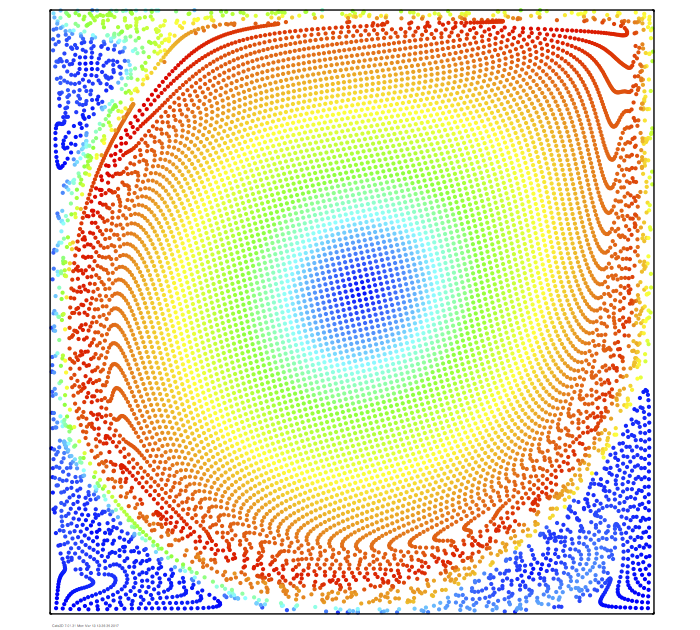

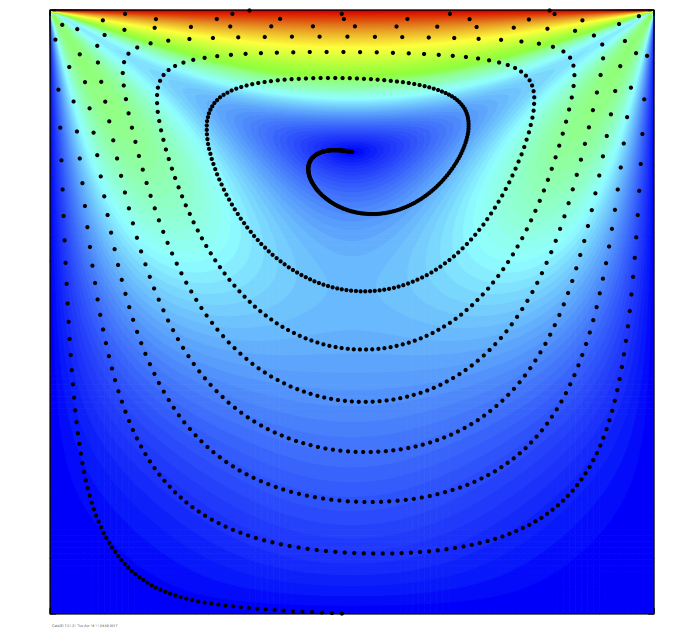

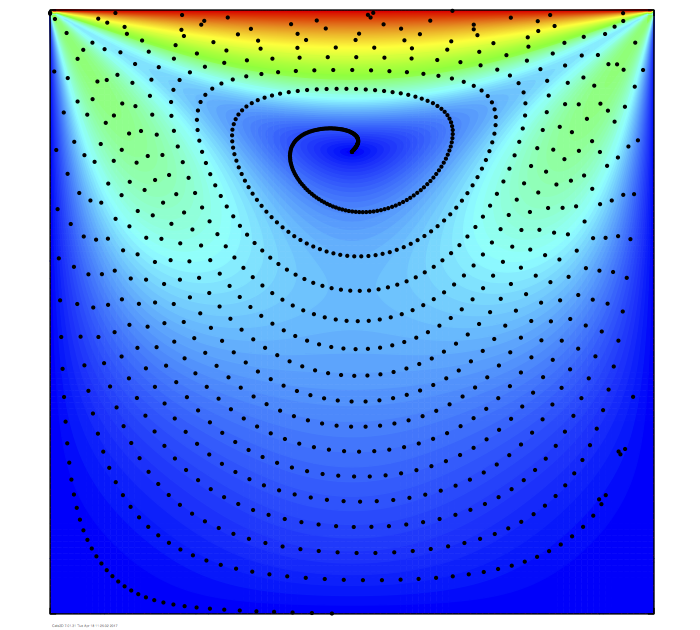

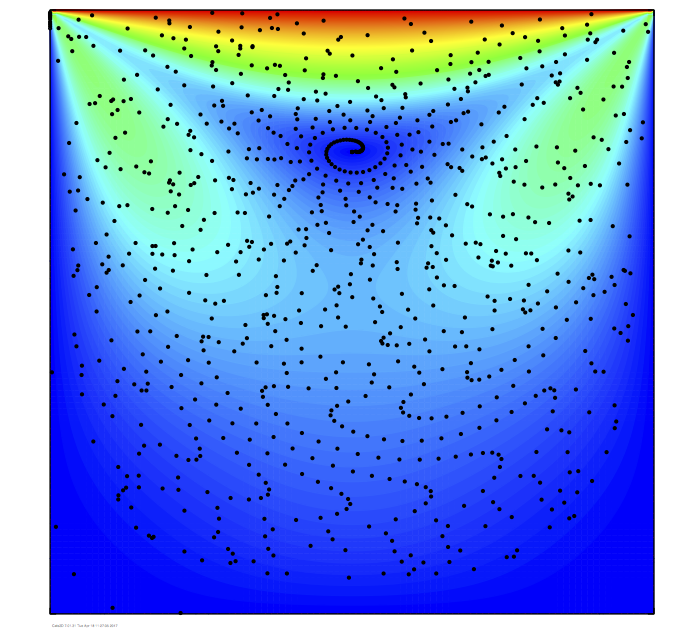

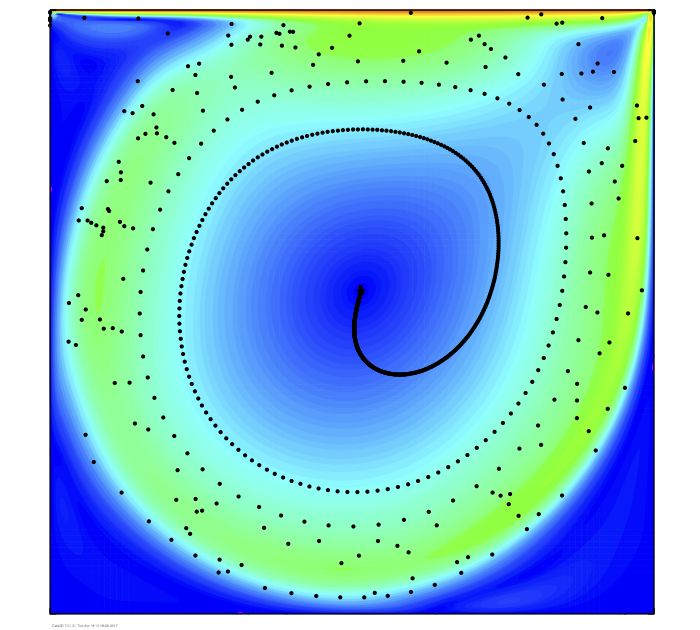

I've added Brownian motion to the particles in some driven cavity flows to see how it affects convective mixing. I've also solved the convective-diffusion equation under matching conditions. These images compare mixing of a stratified liquid at Peclet = 100,000 computed by each approach:

The visual comparison is favorable, but particle statistical data is noisy despite the large number of particles used here. A more detailed investigation of the method is found here.
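Why the particle statistics stay noisy can be seen from a simple counting argument: if a bin is expected to hold n particles, its count fluctuates by about sqrt(n), so the relative noise falls only as 1/sqrt(n) and halving it costs four times as many particles. This sketch assumes uniformly scattered particles and 100 bins purely for illustration; the function names are hypothetical.

```python
import math
import random

def bin_counts(n_particles, n_bins, rng):
    """Drop particles uniformly on [0, 1) and count how many land in each bin."""
    counts = [0] * n_bins
    for _ in range(n_particles):
        counts[int(rng.random() * n_bins)] += 1
    return counts

def relative_noise(counts):
    """Sample standard deviation of the bin counts divided by their mean."""
    n = len(counts)
    mean = sum(counts) / n
    var = sum((c - mean) ** 2 for c in counts) / (n - 1)
    return math.sqrt(var) / mean

if __name__ == "__main__":
    rng = random.Random(1)
    for n_particles in (10_000, 40_000, 160_000):
        counts = bin_counts(n_particles, 100, rng)
        predicted = 1.0 / math.sqrt(n_particles / 100)
        print(n_particles, round(relative_noise(counts), 4), round(predicted, 4))
```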

I talk to the wind

Recently I've been studying machine learning and how it is used in different fields. This is the first time I have looked at any details. Having done so, I think this is the worst-named scientific field ever. Machine learning doesn't even exist. There is no learning. Whoever wrote this opening sentence of the Wikipedia article on machine learning doesn't understand what the word algorithm means:

"Machine learning (ML) is the study of computer algorithms that can improve automatically through experience and by the use of data."

Seriously, machine learning is nothing more than model selection and data fitting. The computer does not rewrite the algorithm; it merely executes the algorithm with improved model selection and/or better data. Automating this does not imbue the computer with a brain. Every single application of machine learning I've studied fits this description, including neural nets. We don't know exactly what a neural net is doing under the hood, but I guarantee it isn't thinking about anything. We contrive a model based on a collection of nodes with values and edges with weights, then apply some rules for manipulating them to improve the model's performance on a training objective. At its heart, I see little to distinguish this from traditional methods of model reduction and optimization. The only intelligence I see is that of the humans who created the algorithm and implemented it.
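To make the point concrete, here is a minimal sketch, not drawn from any particular library, in which a single linear "neuron" (one weight, one bias) is trained by gradient descent on mean squared error. It converges to the same line that ordinary least squares gives in closed form. The data and function names are made up for illustration.

```python
def fit_least_squares(xs, ys):
    """Closed-form ordinary least-squares line y = w*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    w = sxy / sxx
    return w, my - w * mx

def train_neuron(xs, ys, rate=0.1, epochs=2000):
    """'Train' one linear neuron (weight w, bias b) by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradients of (1/n) * sum(residual**2) with respect to w and b.
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        w -= rate * grad_w
        b -= rate * grad_b
    return w, b

if __name__ == "__main__":
    xs = [0.0, 0.25, 0.5, 0.75, 1.0]
    ys = [0.1, 0.6, 0.9, 1.6, 2.0]
    print("least squares:", fit_least_squares(xs, ys))
    print("'learned':    ", train_neuron(xs, ys))
```

For this data both give w = 1.92 and b = 0.08 to within round-off; the "learning" is an iterative way of minimizing the same squared error.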

Now that I've gotten my little rant out of the way, I invite you to read a short article I've written about recent research in quantifying paintings using statistical measures borrowed from information science. In it I critique a recent paper that attempts to characterize painted artworks by pixel-based quantitative measures inspired by information theory. I use my own artworks and photographs, as well as those of the masters, to show why pixel-based approaches are fatally flawed for this type of analysis.

Third Stone From The Sun

Three Lamb-Oseen vortexes are released into the wild.

From the new Triskelion series.

Intellectual Potemkin Village

I've written an essay about my personal experiences developing software in an academic research environment over the past 35 years. In it I take a sharp-edged view of the attitudes held by my supervisors and other colleagues towards computing in research and education.

My latest curio

This is the first time I have posted anything here in over a year. I have been concentrating on my artwork, not only creating it with Cats2D but also bringing it to physical form in various media. I have accumulated a selection of prints on paper, stretched canvas, and aluminum, about 65 pieces so far. The aluminum prints, made by the Chromaluxe process, are gorgeous. I have turned some of these into clocks and thermometers.

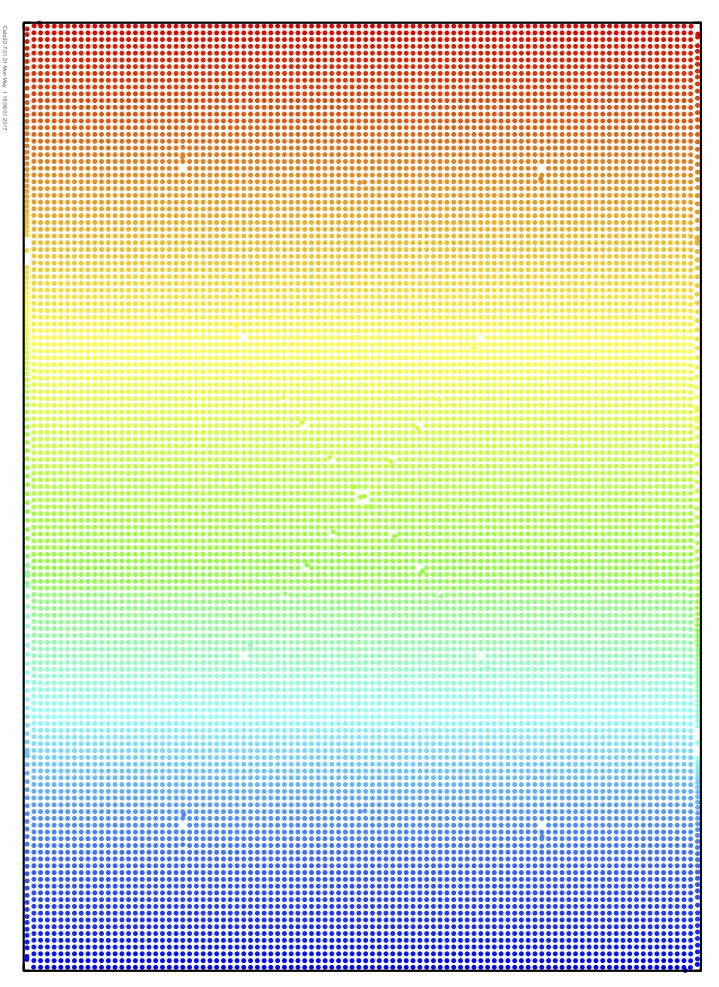

I have also been learning more about color theory, particularly how it relates to human perception. I have added a CIELAB-based color scale to Cats2D, used to make the picture shown above. Working in L*a*b* coordinates is more intuitive than working in the HSV model (hue, saturation and value) used by Cats2D. Notably, Moreland's popular cool-warm diverging scale was designed in the CIELAB color space.
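How Cats2D builds its CIELAB scale isn't described here. As a hedged sketch, assuming the standard CIE formulas with a D65 white point and the sRGB transfer function, this converts L*a*b* to displayable RGB and interpolates a scale linearly in L*a*b*. Out-of-gamut colors are simply clipped, which a careful scale design would avoid; the function names are illustrative.

```python
def lab_to_srgb(L, a, b):
    """Convert CIE L*a*b* (D65 white) to sRGB components clipped to [0, 1]."""
    # D65 reference white in XYZ, normalized so that Yn = 1.
    Xn, Yn, Zn = 0.95047, 1.0, 1.08883
    delta = 6.0 / 29.0

    def f_inv(t):
        # Inverse of the CIELAB companding function f(t).
        return t ** 3 if t > delta else 3.0 * delta ** 2 * (t - 4.0 / 29.0)

    fy = (L + 16.0) / 116.0
    fx = fy + a / 500.0
    fz = fy - b / 200.0
    X, Y, Z = Xn * f_inv(fx), Yn * f_inv(fy), Zn * f_inv(fz)

    # XYZ to linear sRGB (standard matrix for the D65 white point).
    r = 3.2404542 * X - 1.5371385 * Y - 0.4985314 * Z
    g = -0.9692660 * X + 1.8760108 * Y + 0.0415560 * Z
    bl = 0.0556434 * X - 0.2040259 * Y + 1.0572252 * Z

    def gamma(c):
        # sRGB transfer function, then clip colors that fall outside the gamut.
        c = 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1.0 / 2.4) - 0.055
        return min(1.0, max(0.0, c))

    return gamma(r), gamma(g), gamma(bl)

def lab_scale(t, lab_start, lab_end):
    """Color at fraction t (0 to 1) of a scale interpolated linearly in L*a*b*."""
    L, a, b = (s + t * (e - s) for s, e in zip(lab_start, lab_end))
    return lab_to_srgb(L, a, b)
```

Because L* tracks perceived lightness, interpolating it linearly gives a ramp whose lightness steps look roughly even, which is much of why the space feels more intuitive than HSV.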

House of the Rising Sun

People wonder how I spend my time.

Cats2D, all day long.

Kwisatz Haderach

Read my latest work in which I harness the unique tools of Cats2D to analyze flow past a cylinder like you've never seen it before.

In this work I validate Cats2D against experimental visualizations and other data from numerous published sources. I study the stability and dynamic behavior of the flow, and I analyze the structure of the flow to better understand the nature of vortex shedding. The work features many tools of analysis and visualization found in Cats2D that are applicable to any flow, which makes it interesting in ways not directly related to its subject matter.

Two sides of the same coin

Yes, they do look similar. Taneda's photograph of flow past a fence was published in 1979, the year I dropped out of high school (Goodwin, meanwhile, was on station in the Persian Gulf). My Cats2D simulation was computed just now, forty years later. What took so long!

Occasionally someone asks me about the reliability of Cats2D. This comes up as two very different questions:

How do you know the equations are correct?

How do you know the solutions are correct?

The first question is less common than the second. I am asked this question by biologists and chemists, not by engineers or physicists. They usually are not familiar with conservation principles and tend to assume we are formulating ad hoc equations on a problem-by-problem basis. The answer to their concern is that conservation equations solved by Cats2D are standard. The limitations of their applicability are generally known. The Navier-Stokes equations are nearly 200 years old now. They can be misformulated or misapplied, but there is no doubt about their essential correctness under all but the most extraordinary of circumstances.

The second question, which comes up often, cannot be dismissed nearly so easily as the first. Many things can go wrong solving the equations. The algorithms in CFD are complicated. Many parts of the code must work correctly on their own, and interact correctly during its execution. Third party libraries must be chosen carefully and used correctly. Input data must be correct and self-consistent. Output data must be processed accurately. The numerical methods must be sound. The only way to address this question is by prolific testing of the code throughout its lifetime on problems to which reliable answers are known. This is what we do at Cats2D.

Since we are not personally worried about the correctness of the equations themselves, we have mostly been content to validate Cats2D against known solutions to the same equations, exact and numerical, published by others. Simple examples of exact solutions include Couette-Poiseuille type flows and solid body rotation. The solution varies in one space dimension only in these flows, but the test can be strengthened by rotating the domain to an arbitrary degree with respect to the coordinate system. Similarity solutions, for example von Kármán swirling flow or Homann stagnation-point flow, make good test problems. Stokes flow past a sphere and Hill's spherical vortex have particularly simple but non-trivial two-dimensional forms that are excellent for quantitatively validating derived quantities such as streamfunction and vorticity. Many exact solutions in heat and mass transfer can be found in classic textbooks.
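The Cats2D test harness isn't shown on this page; this hypothetical sketch applies the idea to the simplest case, plane Couette-Poiseuille flow between a fixed wall at y = 0 and a wall moving at speed U at y = h, with a constant pressure gradient G = dp/dx. The momentum equation reduces to mu*u'' = G, with exact solution u = U*y/h + (G/(2*mu))*(y**2 - h*y). Because central differences are exact for a quadratic, a correct discretization should reproduce this profile to round-off at every node, so any larger error points to a bug rather than to truncation error. Parameter values and function names are illustrative; the rotated-domain variant described above needs a two-dimensional code and is not attempted here.

```python
def couette_poiseuille_exact(y, h, U, G, mu):
    """Exact velocity between a fixed wall (y=0) and a wall moving at U (y=h),
    with constant pressure gradient G = dp/dx."""
    return U * y / h + G / (2.0 * mu) * (y * y - h * y)

def solve_tridiagonal(a, b, c, d):
    """Thomas algorithm: a = sub-diagonal (a[0] unused), b = diagonal,
    c = super-diagonal (c[-1] unused), d = right-hand side."""
    n = len(d)
    cp, dp = [0.0] * n, [0.0] * n
    cp[0], dp[0] = c[0] / b[0], d[0] / b[0]
    for i in range(1, n):
        m = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / m if i < n - 1 else 0.0
        dp[i] = (d[i] - a[i] * dp[i - 1]) / m
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x

def max_error_fd(n, h=1.0, U=1.0, G=-2.0, mu=1.0):
    """Solve mu*u'' = G with central differences on n intervals and return
    the largest nodal error against the exact profile."""
    dy = h / n
    m = n - 1                          # number of interior unknowns
    a, b, c = [1.0] * m, [-2.0] * m, [1.0] * m
    d = [G / mu * dy * dy] * m
    d[-1] -= U                         # moving-wall value moved to the right-hand side
    u = solve_tridiagonal(a, b, c, d)  # u(0) = 0 contributes nothing
    return max(abs(u[i] - couette_poiseuille_exact((i + 1) * dy, h, U, G, mu))
               for i in range(m))

if __name__ == "__main__":
    for n in (4, 16, 64):
        print(n, max_error_fd(n))
```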

Among numerical solutions, the lid-driven cavity (my favorite computational test bed ever) is exceedingly well documented. The flow has a non-trivial structure that depends strongly on Reynolds number. It is perhaps the most important test problem in CFD. Other well documented flows are Rayleigh-Bénard convection and Taylor-Couette flow, which are especially useful for validating stability and bifurcation analysis. We have also used more complicated and specialized problems to validate Cats2D, for example melt crystal growth, my area of research for twenty years, and liquid film flows, where both Goodwin and I have done substantial work in the past. Every time we add new physics to Cats2D, we test the code against known results. Nothing is done on blind faith.

Lately I have focused on validating Cats2D against physical experiments, including many flow visualizations found in Van Dyke's An Album of Fluid Motion. I hope that my new work on flow past a cylinder satisfies the most ardent skeptic that Cats2D can make accurate predictions of incompressible laminar flow from first principles.

A few entries below, I compare Cats2D simulations to experiments in thermal convection between concentric cylinders. More of these validations are on the way, and I learn something new every time I dip my toes in the water.

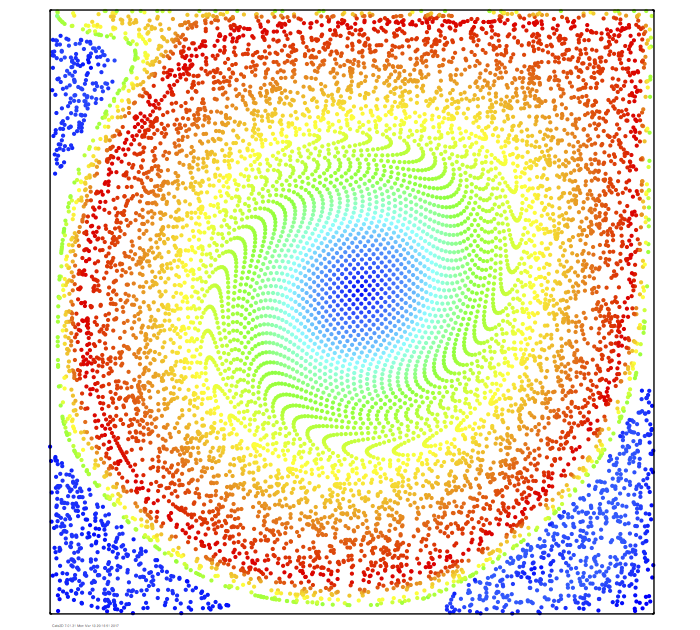

Atelier Cats2D

Where physics, mathematics, and computing are brought together to forge original works of art.

An interacting pair of Lamb-Oseen vortexes was used to compute this drawing for my new Fandango collection, inspired by the works of Georgia O'Keeffe.

CFD winter

Recently I read that the CFD industry grew at a 13% annualized rate between 1990 and 2010. I suspect that growth has remained tepid since then.
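For scale, compounding the quoted rate over those twenty years is simple arithmetic (derived here, not taken from the report):

```latex
(1.13)^{20} = e^{20 \ln 1.13} \approx e^{2.444} \approx 11.5,
\qquad
t_{\mathrm{double}} = \frac{\ln 2}{\ln 1.13} \approx 5.7\ \text{years}
```

That is roughly an elevenfold increase over two decades, or a doubling about every five to six years.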

CFD is extremely hardware intensive. In 1990 it was mostly performed on supercomputers or engineering workstations, which were very expensive, and quite feeble by today's standards. The CFD industry was tiny. Nowadays a $1,000 laptop is way more powerful than a $20 million supercomputer was back then. Sitting on top of this cheap abundant computing power, the industry should be shooting the lights out. In this context, its 13% growth rate is pitiful. A major software company would probably just ax the CFD division if it had one.

The CFD industry limped along for two decades, underwent a lot of consolidation, and continues to limp along. ANSYS, by far and away the largest supplier of CFD-related software, presently has annual revenue of around $1 billion (apparently much of which comes from services). Licenses are very expensive and have never come down in price, which seems unhealthy. Along the way, CFD education and research have declined at major universities. Work coming from the national laboratories is lukewarm at best. This all sounds like failure to me.

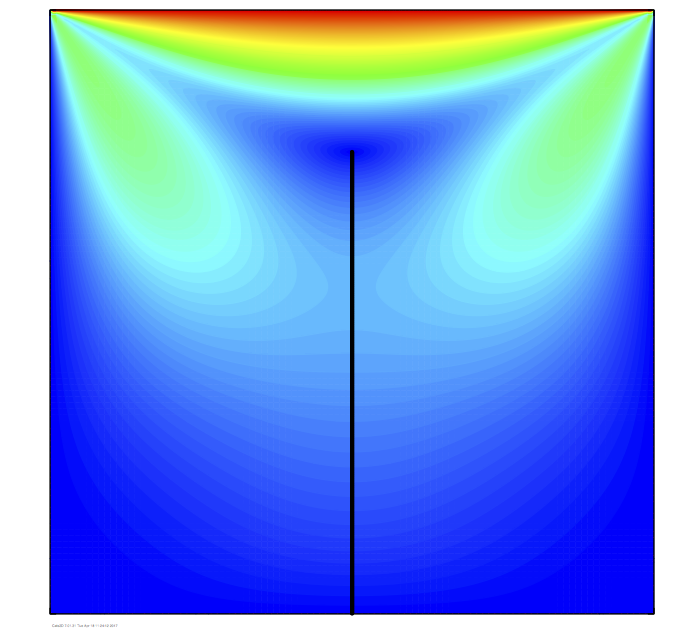

Smoke on the water

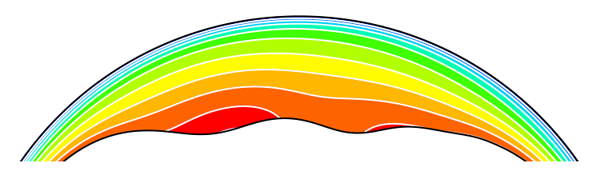

Grigull and Hauf published these photos in 1966, which are shown in figures 208 and 209 of Van Dyke's An Album of Fluid Motion. Steady thermal convection of air is caused by heating the inner cylinder to a uniform temperature above that maintained on the outer cylinder. I have based the Grashof number on the radius of the outer cylinder, not on the gap width as done by Van Dyke; my values are equivalent to those reported by him.
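For readers converting between the two conventions: with the standard definition below, the Grashof number scales with the cube of whichever length is chosen, so the conversion is a fixed factor set by the radius ratio. Here R_i and R_o are the inner and outer radii, g is gravity, beta the thermal expansion coefficient, Delta T the temperature difference, and nu the kinematic viscosity; the notation is assumed, not taken from Van Dyke.

```latex
\mathrm{Gr}_L = \frac{g \beta \Delta T L^3}{\nu^2},
\qquad
\mathrm{Gr}_{R_o} = \mathrm{Gr}_{\delta} \left( \frac{R_o}{R_o - R_i} \right)^3,
\qquad
\delta = R_o - R_i
```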

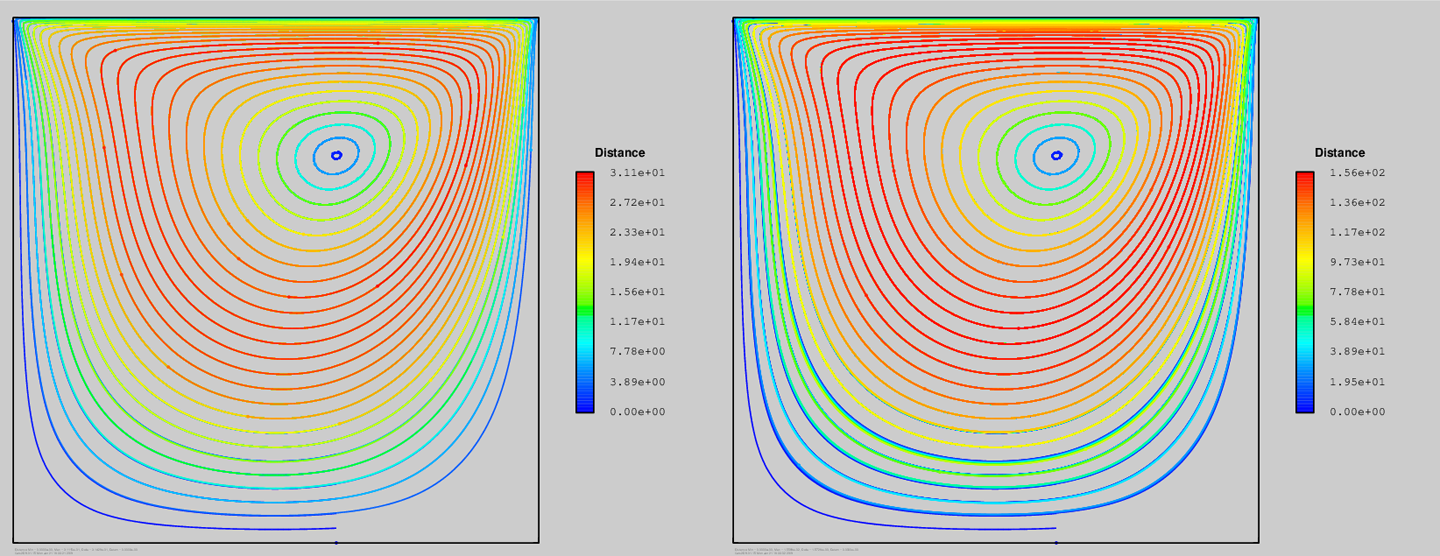

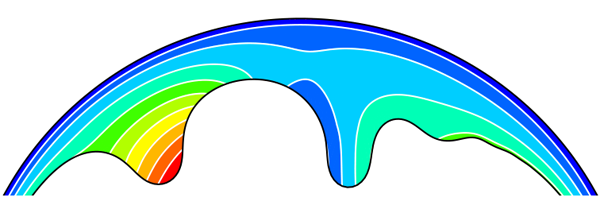

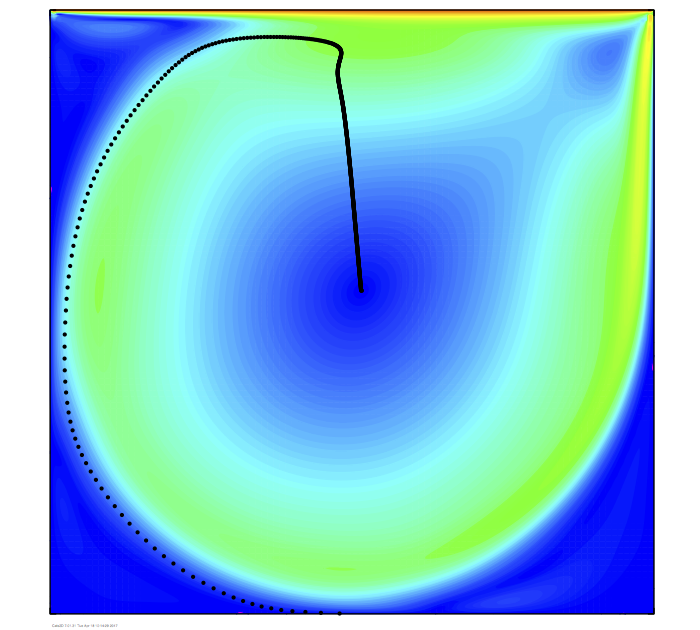

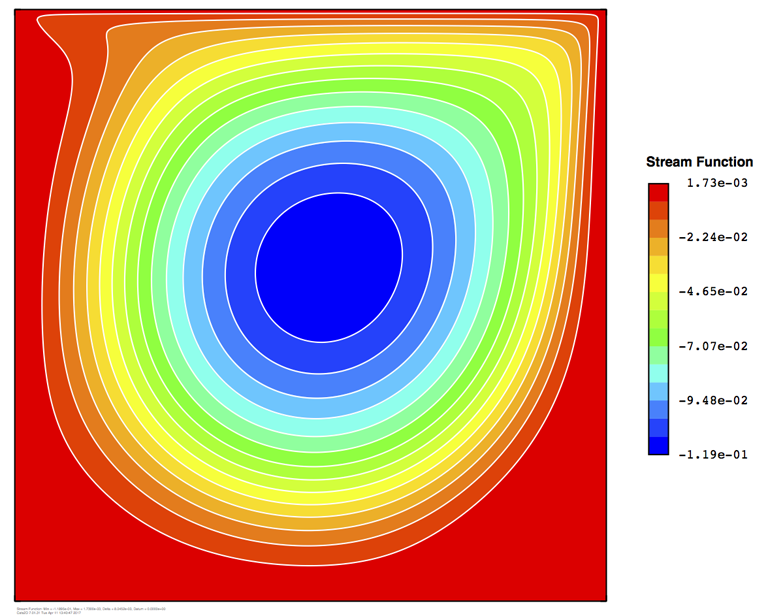

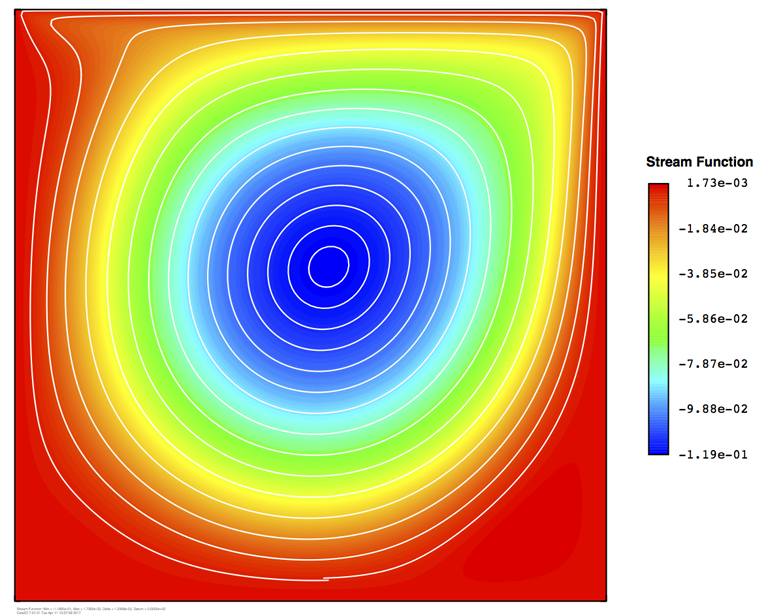

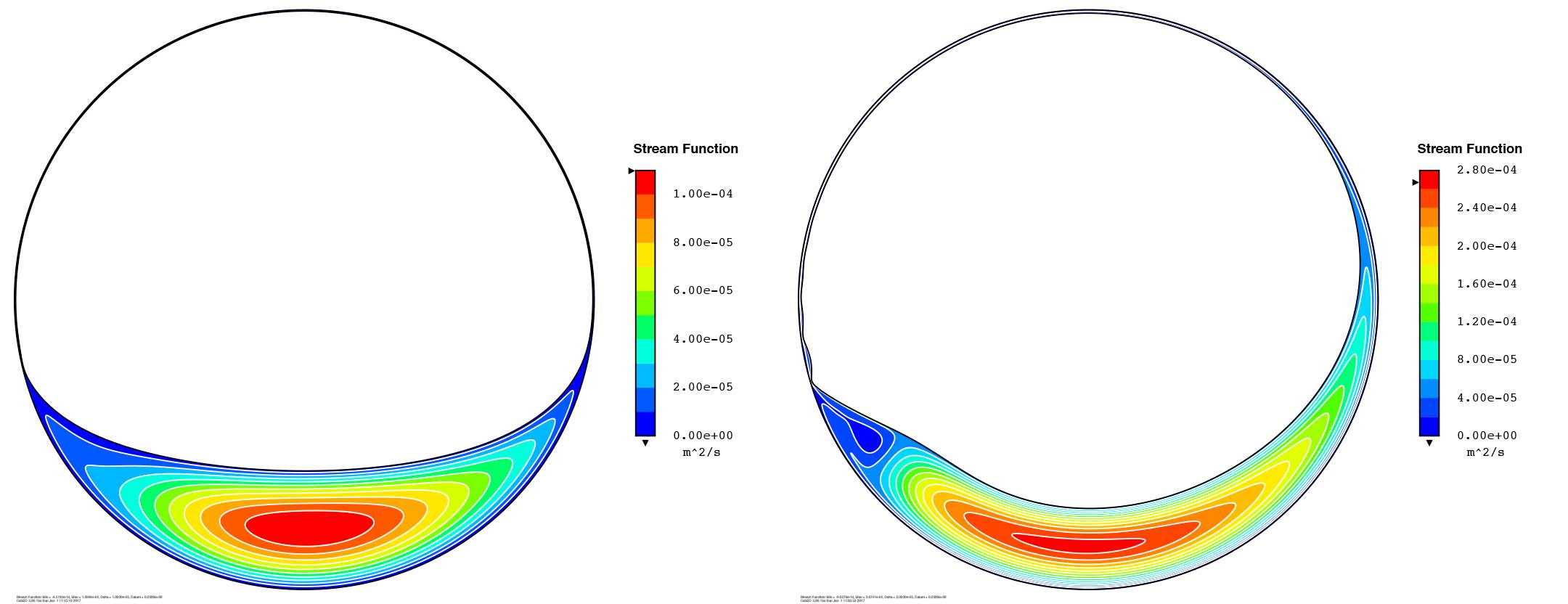

Pathlines visualized by smoke compared to streamlines computed by Cats2D (Grashof = 405,000):

Interferogram fringes compared to temperature contours computed by Cats2D (Grashof = 385,000):

Reproducing classic flow visualizations is turning into an addiction here at Cats2D.

Drop the leash

I left my job five years ago today. Everything at this web site has been created since then. Nobody pays me. Nobody tells me what to do. I can hardly overstate how beneficial this has been to the development of Cats2D. My previous environment at a research university was destructive towards progress in multiphysics simulation. Things are bad everywhere—I cannot think of any university laboratory or institute where good work is done any more in CFD. If I wanted to hire someone to help develop Cats2D, I wouldn't know where to look. The appropriate skills simply aren't taught in our universities.

Remember what the dormouse said

I feel like I am running across an open field when I work on flow visualization in Cats2D. It takes little effort to outdo the uninformative, visually unpleasant work that dominates the field. No aspect of CFD is more ignored than visualization, and I think I know why.

I've written before about the disengagement between intellectual leadership and application software development in physics-based simulation. One of the consequences is that faculty principal investigators continue to favor pencil and paper formalism over direct engagement in computing. Trainees are pressured to make the formalism work in a computer code. They are not particularly encouraged to pursue ideas that appear to be disconnected from the formalism, or that ignore the ostensible goals and preconceived notions of the research program. The faculty advisor is mostly absent from the project and does not share the experience.

But physics-based simulation is highly experiential. Weird stuff comes up all the time. The problems we solve, and the methods we use to solve them, are deeper than I think any of us understand. This is especially true of the incompressible Navier-Stokes equations, which are basically pathological. The numerical methods commonly employed to solve them also have important pathologies. I have found that many of my best ideas have been seeded by a Cats2D computing session in which I hopped from one stone to another for hours, my curiosity piqued by something odd and unexpected I had seen. A naive graduate student working for an out-of-touch advisor is unlikely to take that stroll.

Visualization is neglected because the desk jockeys in the physics-based computing community do not see any attractive formalism to it. To them it is purely technical computing, beneath their station. They spend very little of their time, if any at all, making visualizations, and they don't see it as an intellectually creative endeavor. Others can do that for them. The standards for visualization remain perpetually low.

These boots are made for walking

Here are some Cats2D validation studies from my recent article on flow past a cylinder in the laminar regime.

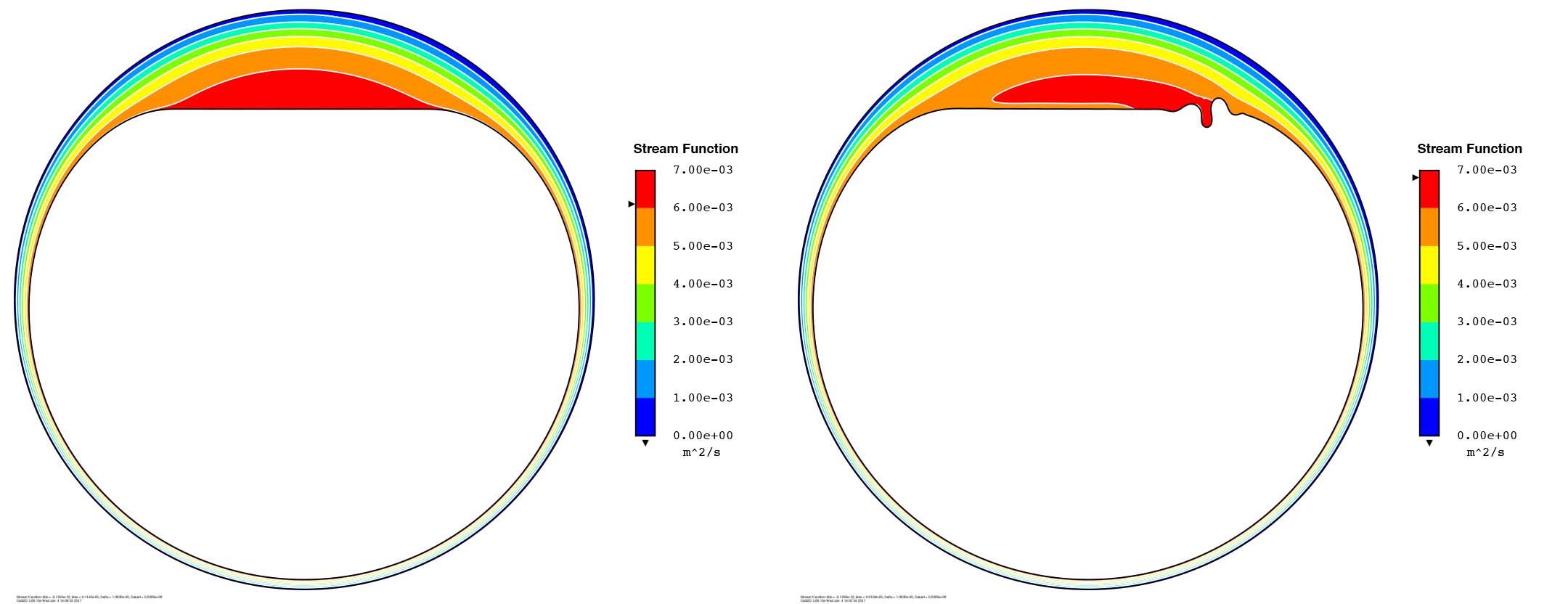

Impulsive start of a cylinder, Re = 1700, Ut/D = 1.92. The photograph by Honji and Taneda (1969) is from figure 61 in Van Dyke's An Album of Fluid Motion. I have enlarged the area where two small vortexes form upstream of the main vortex. Simulations by Cats2D agree very nicely with the photograph.

This next photograph, by M. Coutanceau and R. Bouard, is from figure 59 in Van Dyke. The photograph views the cylinder surface at an oblique angle, which slightly distorts the comparison with the two-dimensional simulations, so I haven't fussed too much with the scale and alignment of the images. Re = 5000, Ut/D = 1.0.

Here is the same flow at a later time. Re = 5000, Ut/D = 3.0.

Cats2D does its job right. It isn't necessary to fiddle around with fussy settings, fudge the physical parameter values, or rely on sketchy numerical methods.

Sneak preview

A line is a dot that went for a walk — Paul Klee

Take a look at my latest art works, the vortex street collection.

Intellectual stasis

I quote from a 2011 white paper by a pair of CFD industry insiders:

"Sadly, new and fundamental research on CFD within universities seems to be limited these days. Commercial vendors seem to be still driving cutting-edge CFD research in-house, and fundamental research from governmental bodies (e.g. NASA, DoD, DoE, EU) tends to be either very application-specific or drying up."

That was written eight years ago. I'm afraid things have only gotten worse since then. Here are some of academia's leading practitioners in CFD research today:

We're nearing the end of the Paleozoic era for this field.

When the bottom drops out

I will never run out of things to do with Cats2D.

The velvet glove

It is impossible to verify the robustness, accuracy, efficiency, or any other meaningful characteristic of a CFD application by reading its source code. Any non-trivial CFD application is far too complicated for that. A code must be tested by verifying it against known solutions of high reliability. Careful monitoring of its output for consistency with conservation principles, boundary conditions, and input data is vitally necessary to detect errors and pathologies. There is no other way.
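A minimal sketch of two such output checks, assuming velocity samples are available along inflow, outflow, and wall boundaries as plain lists. The function names, the trapezoidal quadrature, and the stand-in data are illustrative; a finite element code would more properly compute boundary fluxes with its own basis functions.

```python
def trapezoid(values, coords):
    """Trapezoidal-rule integral of nodal values sampled at 1-D coordinates."""
    return sum(0.5 * (values[i] + values[i + 1]) * (coords[i + 1] - coords[i])
               for i in range(len(values) - 1))

def mass_imbalance(u_in, y_in, u_out, y_out):
    """Relative mismatch between volumetric flow rates entering and leaving
    a channel (assumes a nonzero inflow rate)."""
    q_in = trapezoid(u_in, y_in)
    q_out = trapezoid(u_out, y_out)
    return abs(q_in - q_out) / abs(q_in)

def boundary_violations(computed, prescribed, tol):
    """Indices of boundary nodes whose computed value misses its prescribed value."""
    return [i for i, (c, p) in enumerate(zip(computed, prescribed)) if abs(c - p) > tol]

if __name__ == "__main__":
    ys = [i / 20 for i in range(21)]
    inflow = [6.0 * y * (1.0 - y) for y in ys]   # parabolic inlet profile
    outflow = [1.001 * u for u in inflow]        # stand-in for a computed outflow
    print("relative mass imbalance:", mass_imbalance(inflow, ys, outflow, ys))
    wall_u = [0.0, 0.0, 2e-9, 0.0]               # stand-in for computed wall velocities
    print("nodes violating no-slip:", boundary_violations(wall_u, [0.0] * 4, 1e-10))
```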

Reproducibility is an illusion. The environment for scientific computing changes constantly. It is inherently unstable. Especially if you insist on riding the wave of cutting edge technologies that will quickly change or may soon disappear (data parallel paradigms back then, GPU-based computing today, something else tomorrow). The best you can do is write everything yourself in C to run on ordinary hardware, based on minimal libraries that are permanent features of the landscape, e.g. BLAS. Maybe MPI, but CUDA, no way.

Reproducibility is especially problematic when you rely on a commercial code. Upward compatibility of your archived results is not guaranteed, and the code might go out of existence or become unaffordable to you in the future. You may never solve that problem again.

Replication is the best we can do. We can try to make it as easy as possible by carefully archiving code and input data, but conditions are certain to change until reproduction is no longer possible. In practice this often happens within a few years.

Many times I have resurrected an old problem using Cats2D. Take ACRT, for example. It has been 20 years since I first studied this problem. In 2000 and 2001 I published papers featuring ACRT simulations of considerable complexity. I can no longer reproduce these solutions in any meaningful sense. Cats2D has evolved too much since then. The linear equation solver is totally new, the time-integration algorithm has been reworked, the equations have been recast from dimensionless to dimensional form, the input data has been restructured in several ways, and the solution file format has changed.

For these and other reasons I don't bother to maintain careful archives for problems like this. If I want to solve this problem again, I simply replicate it, whatever it takes to do it. I've done this many times. My approach to this entire issue of reproducibility is to maintain my tools, my skills, and my state of knowledge instead of the data itself. I can replicate my solutions faster than most people can reproduce their solutions. And every time I do it, I am looking for things I might have missed before.

Which brings me full circle to my original point: reading the source code is no substitute for verification. It isn't even useful as an aid to verification. I vehemently disagree that it is necessary or desirable in all cases to provide source code to reviewers or readers in order to publish a paper. I think that only rarely would this be the case, and certainly not for a CFD code. No reviewer is ever going to dig into Cats2D deeply enough to reliably determine what it is doing, even if it were made available to them. It is 170,000 lines long, spread across 240 source code files.

Curiously, proprietary codes such as Fluent would be excluded from published work altogether, despite a long history of testing by a large user base. I am inclined to trust Fluent more than a one-off code written by a post-doc that a couple of reviewers decided was probably okay. Fluent is proprietary for a very good reason: it has high commercial value. Licenses cost tens of thousands of dollars per year. Why? Because it took a lot of work to develop it. Cats2D took a lot of work to develop, too. How are people who create high value software supposed to get paid, according to the signatories of this manifesto?

Fugue state

Andrew Yeckel, Purveyor of Fine Solutions, Visualizations, Hallucinations, and other CFD curiosities

From my new Kaleidoscope collection in the art gallery.

Where it stops nobody knows

My latest visualization idea: ink balls. I pack a circle with a massive number of particles and release it into a flow. Roll over this image to watch an ink ball strike a cylinder in a flowing stream:

In this hyper-accurate calculation, the Navier-Stokes equations are discretized with 557,000 unknowns and time-integrated in coordination with tracking 80,000 particles in the ink ball. Cats2D slices and dices this problem in about 20 minutes on my puny laptop, which includes rendering 200 frames for the animation.
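How Cats2D seeds an ink ball isn't described here. One simple, deterministic way to pack a disk evenly, sketched below as an assumption, is Vogel's sunflower spiral: particle k sits at radius R*sqrt((k + 1/2)/n), so each particle claims about the same area, and successive particles are rotated by the golden angle so that no radial spokes form. The function name, center, and radius are hypothetical; 80,000 particles matches the calculation above.

```python
import math

GOLDEN_ANGLE = math.pi * (3.0 - math.sqrt(5.0))  # about 137.5 degrees

def ink_ball(n, radius, cx=0.0, cy=0.0):
    """Place n particles evenly over a disk using Vogel's sunflower spiral.

    Radius grows as sqrt(k) so each particle claims roughly equal area;
    successive particles are rotated by the golden angle to avoid spokes.
    """
    points = []
    for k in range(n):
        r = radius * math.sqrt((k + 0.5) / n)
        theta = k * GOLDEN_ANGLE
        points.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))
    return points

if __name__ == "__main__":
    particles = ink_ball(80_000, 0.1, cx=1.0, cy=2.0)
    print(len(particles), particles[:3])
```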

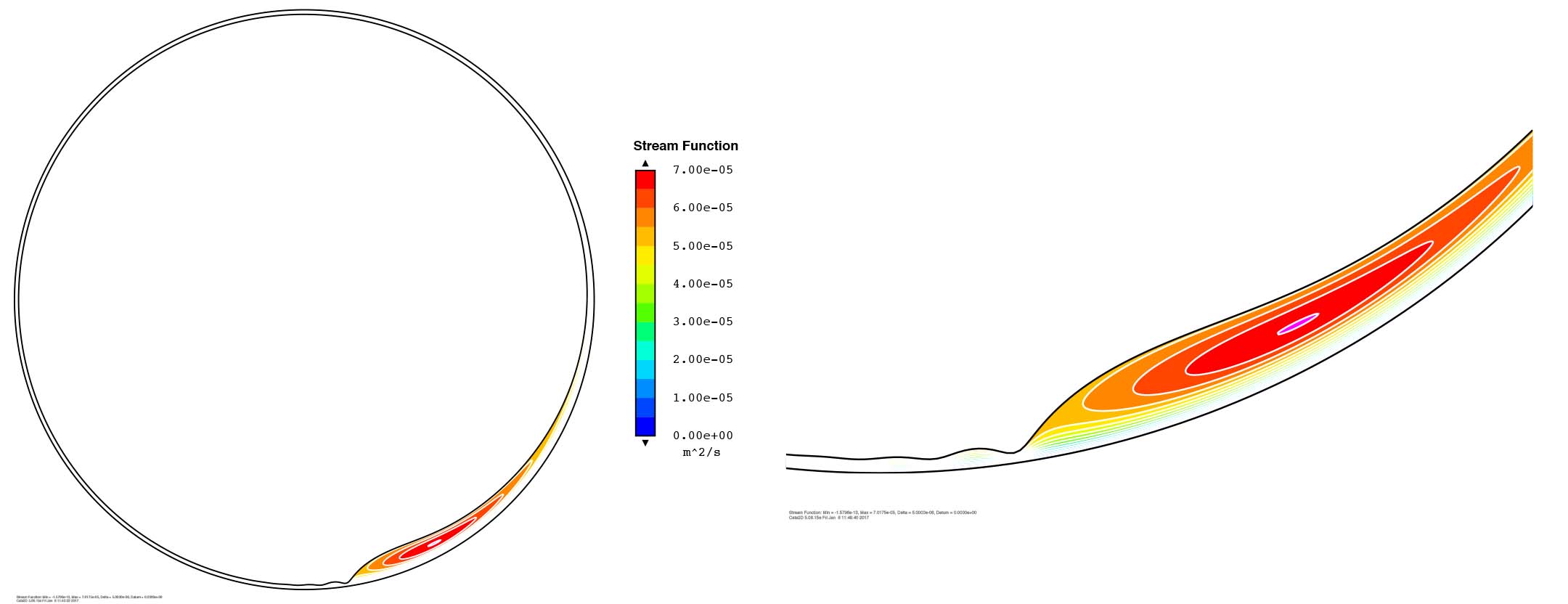

A while ago I showed results from a problem cooked up by Goodwin that has a fascinating internal flow structure. Fluid fills the space between an inner cylinder and a deeply corrugated outer wall. The inner cylinder rotates clockwise and the outer wall remains stationary. The flow topology, shown below, consists of fifteen co-rotating vortex centers, enclosed by seven nested saddle streamlines.

Roll over the image below to see what happens when an ink ball is released into this flow. The animation is 55 seconds long, so give it time to reveal the pattern.

After particles have spread out for a while, some areas internal to the flow remain devoid of them. Eventually all fifteen vortex centers are surrounded by particles, making them visible. It's like secret writing. Warm the paper over a flame and watch the hidden message appear.

Now watch this Yin-Yang ink ball hit the cylinder. Fun!

I think I'm going to like ink balls.

Andrew's no-bullshit CFD manifesto

Recently I stumbled across a few manifestos on scientific computing written by academic researchers. I think these manifestos are misguided and smack of provincialism. Later on, I will share some thoughts on why. For the time being, I will offer up my own manifesto, thrown together just now with a little help from Goodwin, even though I think manifestos are stupid:

—I will use numerical algorithms appropriate to each task and I will strive to understand these algorithms even when they originate with others.

—I will validate my code vigorously against known solutions, and I will subject all my solutions to rigorous tests of conservation and adherence to prescribed boundary conditions.

—I will ensure that solutions are reliably converged in their spatial and temporal discretization, without resorting to methods having excessive dissipation, artificial diffusion, or ad hoc stabilization.

—I will show accurate and informative visual representations of my solutions that are true to the underlying data.

—I will maintain a repository explaining my methods and algorithms in sufficient detail for an expert to reproduce the work in a similar fashion.

—I will learn my craft and maintain my skills by direct and frequent engagement with programming and simulation.

That's it. Everything else about reproducibility declarations, version control, github, and all that, is just bullshit. Now take a look around my web site to see the manifesto in action.
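The manifesto's third item calls for solutions that are reliably converged in their spatial and temporal discretization. One standard way to check this, sketched here with hypothetical function names, is to compute the observed order of accuracy from solutions on successively refined meshes, compare it with the formal order of the method, and then use Richardson extrapolation to estimate the remaining discretization error. The sketch assumes smooth solutions that are already in the asymptotic range of convergence.

```python
import math

def observed_order(e_coarse, e_fine, refinement=2.0):
    """Observed order p from errors on two meshes with refinement ratio r."""
    return math.log(e_coarse / e_fine) / math.log(refinement)

def observed_order_three_grids(f_coarse, f_medium, f_fine, refinement=2.0):
    """Order from three solutions of one quantity when the exact value is
    unknown (assumes monotone convergence)."""
    return (math.log(abs(f_coarse - f_medium) / abs(f_medium - f_fine))
            / math.log(refinement))

def richardson_extrapolate(f_medium, f_fine, p, refinement=2.0):
    """Estimate of the mesh-converged value from the two finest solutions."""
    return f_fine + (f_fine - f_medium) / (refinement ** p - 1.0)

if __name__ == "__main__":
    # Synthetic quantity f(h) = 1 + 0.3*h**2 on meshes h = 0.4, 0.2, 0.1.
    fc, fm, ff = (1.0 + 0.3 * h * h for h in (0.4, 0.2, 0.1))
    p = observed_order_three_grids(fc, fm, ff)
    print("observed order:", p, "extrapolated value:", richardson_extrapolate(fm, ff, p))
```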

Ride my see-saw

A preview of my latest work, visualizing the Kármán vortex street. The first animation shows vorticity with particle streaklines (see a larger version here), and the second shows separation streamlines, at Re = 140.

I am solving the problem to great accuracy using over 550,000 unknowns for Reynolds numbers up to 300, which approximately covers the regime in which vortex shedding is periodically steady.

What a wonderful world

This extravagant cake tastes every bit as good as it looks, maybe even better. All of it—the almond brittle, the chocolate covered caramels, the glazed cake balls, the nuts in syrup—was made from scratch by its talented creators. Inside are layers of chocolate cake, chocolate mousse, spice cake, mascarpone filling, and more chocolate cake. Everything pulls together. It's as though Cook's Illustrated, America's Test Kitchen, and The Great British Bake Off joined forces to create this marvel.

There is a lot of engineering going on here. Structural issues are important, of course, but material selection and handling are critical too. I was forbidden to watch its final assembly so I don't fully understand how the chocolate cladding was applied. I do know that critical steps were carried out at chocolate temperatures of 94 °F and 88 °F, and also that the chemical diagrams (acetaldehyde can be seen in the photo on the right) were added by transferring white chocolate from acetate paper to the dark chocolate.

A dinner was served preceding the presentation of the cake on the occasion of my half birthday. The Goodwins attended. I showed off some recent artworks created by Cats2D.

Visit my research topics page

The "Developments" page you are now reading, which is essentially my blog, has grown enormously long. It chronicles my work of the past four years without any attempt to organize it. To make it easier to use my research, I have collated related material from this page and elsewhere on the web site into articles that are found at my research topics page. Some articles emphasize crystal growth. Others emphasize visualization or numerics. I've grouped them together in a way that makes sense to me. Here are some of the articles:

Submerged insulator/Rotating baffle vertical Bridgman method (SIVB/RBVB)

Unstable thermal convection in vertical Bridgman growth

Computing mass transport in liquids

I will add new articles to the research topics page over time. I will also continue to blog here on the developments page, in its usual stream of consciousness style.

Mondrian with a twist

A physics-based calculation was used to make this picture.

The three bears

I have put together a short discussion of a situation I have encountered several times when time integrating a system under batch operation. Most recently I encountered it when studying unstable thermal convection in vertical Bridgman growth. Previously I saw similar behavior studying detached vertical Bridgman growth (DVB) and temperature gradient zone melting (TGZM). In DVB the capillary meniscus bridging the crystal to the ampoule wall becomes unstable. In TGZM the shape of the solid-liquid interface becomes unstable. These systems have in common a slow time scale for batch operation, and a fast time scale for the local instability.

The wide separation of these time scales is problematic. In each of these cases it is possible to outrun the instability using a time step small enough to capture the batch dynamics accurately, but too large to resolve the faster time scale of the instability. It is quite easy to miss the instability altogether, even when using tight error control during integration. Nonlinear analysis is endlessly surprising, which is both rewarding and frustrating. For these kinds of problems it can be a dangerous walk in the woods.
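The text doesn't say which integrator is involved, so as an assumption take backward Euler, whose amplification factor is easy to read. Applied to a single growing mode y' = lam*y with lam > 0, each implicit step multiplies y by 1/(1 - lam*dt); once lam*dt > 2 that factor has magnitude below one and the computed mode decays even though the true one grows. A step sized for slow batch dynamics can therefore step right over a fast instability. The function name and parameter values are illustrative.

```python
def backward_euler_growth(lam, dt, t_end, y0=1.0):
    """Integrate y' = lam*y with backward Euler and return y at t_end.

    Each implicit step multiplies y by 1/(1 - lam*dt).  For an unstable
    mode (lam > 0) with lam*dt > 2 the factor has magnitude below one,
    so the integrator damps a mode that should grow.
    """
    steps = round(t_end / dt)
    y = y0
    for _ in range(steps):
        y = y / (1.0 - lam * dt)
    return y

if __name__ == "__main__":
    lam = 10.0  # fast instability: growth time scale 0.1
    for dt in (1.0, 0.5, 0.01, 0.001):
        print(dt, backward_euler_growth(lam, dt, 1.0))
```

With lam = 10 over one time unit, dt = 1 gives an amplitude of -1/9, while dt = 0.001 gives roughly 2.3 x 10^4, close to the exact e^10 (about 2.2 x 10^4). An error estimator that sees only the smooth, decaying numerical solution has no reason to shrink the step.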

Gilding the lily

Flow visualization is often facilitated by adding light reflecting particles to a liquid, such as aluminum dust or tiny glass beads, or by injecting dye into a liquid. Many beautiful photographs obtained this way have been published in the science literature. In 1982 Milton Van Dyke published An Album of Fluid Motion, a uniquely interesting collection of several hundred photographs contributed by experimentalists from around the world.

If someone were to follow in Van Dyke's footsteps and collect images for a new album of fluid motion based on computer-generated images of theoretical flow calculations, I doubt that nearly so many worthy images could be assembled. It's a shame given the enormous aesthetic potential of the subject matter.

Computation XI with Red, Blue and Yellow

See some of my other works based on the paintings of Piet Mondrian.

Vetrate di Chiesa

Metis partitioning of a mesh for nested dissection of the Jacobian matrix in Cats2D:

Preaching from the pulpit again, here are my thoughts on how to rank the following activities in terms of their importance to CFD:

Numerical methods > Algorithms > Serial optimization > Parallelization

Consider Gaussian elimination. Factorizing a dense matrix requires O(N^3) operations. Tricks can be applied here and there to reduce the multiplier, but there are no obvious ways to reduce the exponent (there are some unobvious ways, but these are complicated and not necessarily useful). This scaling is brutal at large problem sizes. But we need Gaussian elimination, which affects everything else downstream.

To deal with this we build bigger, faster computers. When I started it was vector supercomputers. Later came parallel supercomputers. Now we have machines built from GPUs. Using any or all of these approaches to mitigate the pain of the N^3 scaling of Gaussian elimination is futile. Increasing N by a factor of ten means increasing the operations by 1000. So parallelizing our problem across 1000 processors gains us a factor of ten in problem size if the parallelization is ideal, and a factor of five at best under actual conditions. It isn't much return for the effort.
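To make the arithmetic explicit: if the work grows as N^3 and the wall-clock budget is fixed, P processors at parallel efficiency E let N grow by (E*P)^(1/3). By this measure a factor of five on 1000 processors corresponds to an efficiency of 12.5%, a figure derived here rather than stated in the text. The helper names are hypothetical.

```python
def size_gain(processors, efficiency=1.0, exponent=3.0):
    """Growth factor in N affordable in the same wall-clock time when work ~ N**exponent."""
    return (processors * efficiency) ** (1.0 / exponent)

def efficiency_for_gain(gain, processors, exponent=3.0):
    """Parallel efficiency needed to grow N by `gain` on `processors` processors."""
    return gain ** exponent / processors

if __name__ == "__main__":
    print(size_gain(1000))                  # dense elimination, ideal speedup: about 10
    print(efficiency_for_gain(5.0, 1000))   # a factor of five implies 12.5% efficiency
    print(size_gain(1000, exponent=2.0))    # an O(N^2) method: about 31.6
```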

Of course no one working in CFD factorizes a dense Jacobian. Sparse matrix methods are used instead. A simple band solver, for example, costs O(N b^2) operations for a half-bandwidth b; on a two-dimensional mesh b grows like N^(1/2), so the scaling drops to O(N^2). A multifrontal solver does not scale so simply, but scales much better than O(N^2) on the problems solved by Cats2D. Popular Jacobian-free alternatives also generally scale better than O(N^2). By choosing an algorithm that takes advantage of special characteristics of the problem, it is possible to reduce operations by a factor of N or more, where N is typically greater than 10^5 and often much larger than that. Reducing the exponent on N is where the big gains are to be found, and this can only be done at the algorithm level.
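The cost of a band solver can be read directly from a sketch of Gaussian elimination restricted to the band |i - j| <= bw. Without pivoting no fill-in escapes the band, so each pivot touches at most bw rows and bw + 1 columns, about N*bw^2 operations in all instead of N^3/3. This is a generic textbook illustration in pure Python, not the Cats2D or Goodwin solver; the test matrix is made strictly diagonally dominant so that skipping pivoting is safe, and the function names are hypothetical.

```python
def banded_test_matrix(n, bw):
    """Strictly diagonally dominant n x n matrix with half-bandwidth bw."""
    return [[(2.0 * bw + 1.0) if i == j else (-1.0 if abs(i - j) <= bw else 0.0)
             for j in range(n)] for i in range(n)]

def band_solve(A, rhs, bw):
    """Gaussian elimination restricted to the band |i - j| <= bw.

    Returns (x, op_count), where op_count counts multiply-adds in the
    elimination loop: at most n*bw*(bw + 1).
    """
    n = len(A)
    A = [row[:] for row in A]   # work on copies
    rhs = list(rhs)
    ops = 0
    for k in range(n):
        last = min(k + bw + 1, n)   # one past the last row/column inside the band
        for i in range(k + 1, last):
            m = A[i][k] / A[k][k]
            for j in range(k, last):
                A[i][j] -= m * A[k][j]
                ops += 1
            rhs[i] -= m * rhs[k]
    # Back substitution also only looks bw entries to the right of the diagonal.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = rhs[i]
        for j in range(i + 1, min(i + bw + 1, n)):
            s -= A[i][j] * x[j]
        x[i] = s / A[i][i]
    return x, ops
```

For fixed bw the count grows linearly with n; it is the growth of bw with mesh size on a two-dimensional problem that pushes a band solver toward O(N^2).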

This is a simple example, but I have encountered this theme many times. The first working version of an algorithm can be sped up by a factor of ten, and sometimes much, much more. I once rewrote someone else's "parallel" code as a serial code. When I was done my serial version was 10,000 times faster than the parallel version. There is no way hardware improvements can compensate for a poorly chosen or badly implemented algorithm. Far too often I've heard parallel computing offered as the solution to what ails CFD. It's totally misguided.

Now let's look more closely at the progressive improvements Goodwin made to his solver these past few years. His starting point, what he calls "Basic Frontal Solver" in the graph, was written in 1992. Before discussing the performance gains in this graph, I want to emphasize that this "basic" solver is not slow. It has many useful optimizations. In this early form it was already faster than some widely used frontal solvers of its day, including the one we were using in Scriven's group.

Also in 1992, Goodwin added two major improvements: static condensation ("SC") and simultaneous elimination of equations ("Four Pivots") by multirank outer products using BLAS functions. These changes tripled the speed of the solver, making it much faster than its competitors of the day. Note that these improvements are entirely algorithmic and serial in nature, without multithreading or other parallelism. For the next 22 years we sailed along with this powerful tool, which, remarkably, remains competitive with modern versions of UMFPACK and SuperLU.

At the end of 2014 Goodwin climbed back aboard and increased solver speed by a factor of six using nested dissection to carry out the assembly and elimination of the equations. Again this was done by improving the algorithm, rather than by parallelizing it. Using multithreading with BLAS functions to eliminate many equations simultaneously contributed another factor of three, a worthy gain, but small in comparison to the cumulative speedup of the solver by serial algorithmic improvements, which is a factor of twenty compared to the basic solver.

I've simplified things a bit because these speedups are specific to the problem used to benchmark the code. In particular they fail to consider the scaling of factorization time with problem size. This next plot shows that Goodwin's improvements have changed the scaling approximately from O(N^1.5) to O(N^1.2), primarily attributable to nested dissection. The payoff grows with problem size, the benefit of which can hardly be overstated. Not to be overlooked, he also reduced the memory requirement by a factor of ten, allowing much larger problems to fit into memory.
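Exponents like these are usually read off a log-log plot of factorization time against problem size. Below is a minimal sketch of that fit, a least-squares slope in log-log space. The timings are synthetic, generated from an assumed power law. They are not Goodwin's measurements.

```python
import math


def fit_exponent(sizes, times):
    """Least-squares slope of log(time) versus log(size), assuming time ~ C * size**k."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in times]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den


# Synthetic timings (not measured data): t = 1e-6 * N**1.2
sizes = [1e4, 1e5, 1e6]
times = [1e-6 * n ** 1.2 for n in sizes]
print(round(fit_exponent(sizes, times), 3))  # 1.2

# Going from O(N^1.5) to O(N^1.2) saves a factor of N**0.3, which grows with N.
for n in sizes:
    print(f"N = {n:.0e}: O(N^1.5)/O(N^1.2) = {n ** 0.3:.1f}")
```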

One million unknowns in less than ten seconds on a rather pathetic 2013 MacBook Air (two cores, 1.3 GHz, 4 GB memory). This is just good programming. Big shots at supercomputer centers think they've hit the ball out of the park if they can get a factor of sixty speedup by parallelizing their code and running it on 256 processors. It's the Dunning-Kruger effect on steroids.

Parallelizing a poorly optimized algorithm is a waste of time. Optimizing a badly conceived algorithm is a waste of time. Applying a well conceived algorithm to an inappropriate numerical method is a waste of time. Failure to understand these things has led to a colossal waste of computing and human resources.

Computation with Red, Blue and Yellow

Untitled. First in a series based on the paintings of Piet Mondrian.

Sweet dreams and flying machines

An excerpt from my first publication ever, written with Stan Middleman. It was mostly his paper, but I ran some simulations using an early version of Fidap on a Cray X-MP at the brand new San Diego Supercomputer Center back in 1985. Look at those ugly streamline contours.

This was the last time I ever used a commercial CFD code. I don't miss it. Scroll down to my post "Eating the seed corn" to learn more about what has happened to CFD since then.

Nobody does it better

Goodwin makes art, too. Out of respect for Mondrian, he restricts himself to secondary colors.

These images are taken from his slide presentation on the frontal solver. I cried when I skimmed it, forever grateful that I didn't have to sit through the talk itself. I'm starting to worry about my dependence on his solver, however, and I'm wondering if it would be possible to have him cryogenically preserved in case I outlive him.

Time for a fika break

Way down this page is a post titled "Lost in the hours" that compares a standard convective-diffusion model to a particle convection model computed by pathline integration. By massively seeding the flow with non-interacting particles we are able to represent mass transport in the singular limit of zero diffusion. I explore this approach here on the problem I studied in my recent post "Drifting petals" on the mixing of two compositional layers of a liquid by motion of a plunger in a cylinder approximately 15 ml in volume.

Let's start by looking at some still frames from the first downstroke of the plunger. On the left is a frame from the convective-diffusion model. In the middle is a frame from the particle convection model. Red and blue particles turn into a swarming mess of purple in these visualizations, so on the right I have tried the Swedish flag colors for better contrast. I think I prefer it for the particle visualizations.

The agreement is remarkable. The particle convection model reproduces many fine details of the convective-diffusion model. This lends confidence to both models, which are drastically different from one another, both in their mathematical formulations and in their algorithmic implementations. There are some issues with this comparison that are not obvious, but I will defer their discussion to the end of this post.

Mouse over the images below to see more broadly how the two models compare. After two strokes of the plunger the unmixed length scale has been reduced to the point that diffusion becomes important and the models substantially deviate from one another. In the early stages, however, when most of the mixing occurs, the particle convection model gives a very informative view of mass transport.


Diffusion has largely homogenized the two layers after five piston strokes (below left). Without diffusion the particles are fairly well mixed, but not perfectly so (below right).

It should be noted that the diffusion coefficient used here, D = 10⁻⁸ m²/s, is three to ten times larger than typical for small molecules. A smaller, more realistic value is very expensive and difficult to simulate because of the sharp concentration gradients found throughout the domain. These gradients place tight restrictions on time step and element size. In Cats2D all equations are solved simultaneously on the same mesh (no sub-gridding), so these restrictions carry over to the flow equations.

If conditions are such that a particle convection model can be used to study convective mixing, it is necessary to solve only the flow equations. A factor of three reduction in CPU time is obtained by eliminating the species transport equation and its restrictions. There are fewer unknowns, a larger time step can be used, and the Jacobian is better conditioned which translates to a faster elimination by the frontal solver. The added effort needed to integrate the particle pathlines is modest. I was able to reduce the total number of elements by a factor of four without compromising the solution, which gained an additional factor of five speedup. Overall the CPU time needed to simulate one period of plunger motion was reduced from 3 hours to 12 minutes with these changes.

Now about those issues I alluded to earlier. The pathline integrations shown here have been seeded with approximately 20,000 fictitious particles uniformly spaced in the liquid. The particles represent mathematical points, but we must draw dots or else we could not see them. These dots will overlap in the drawing where two particles have approached close to one another. Consider what happens when uniformly spaced particles are subjected to an extensional flow. Spacing between the particles stretches in one direction and compresses in the other direction.

Empty space is created in the drawing when the dots overlap. The total areas of dots and empty space are not conserved, something I brought up earlier in the "Paint the sky with stars" entry halfway down this page. This explains in part the appearance of white space in the particle visualizations when none was apparent in the initial condition. This is particularly obvious underneath the plunger at the start of the simulation where the flow is strongly extensional along the center axis.

However, there is more going on here than meets the eye. The equations represent conservation in a cylindrical geometry, but are visualized in a planar cross-section on the page. Areas near the center axis represent comparatively little volume. At the top of the stroke a large part of the plunger's planar cross-section has been removed from the liquid, but most of it comes from the narrow shaft, so the volume removed is not as great as it appears. The liquid level falls only slightly to compensate for this small volume, far less than the area created underneath the plunger in the drawing.

As a consequence the total area of the liquid displayed in the drawing is larger at the top of the stroke than at the bottom, even though its volume remains constant in the problem formulation. For this reason alone empty space will increase when the plunger is withdrawn. But there is another important implication regarding the particles themselves. If we regard them as objects in the cylindrical geometry, they must represent toroids rather than spheres. To maintain a fixed volume, the cross-sectional area in the plane must increase when a particle moves towards the center axis. The concept is illustrated below using bagels.
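The bagel argument is Pappus's centroid theorem. A planar area A revolved about the axis at centroid radius r sweeps out a volume 2πrA, so keeping the volume fixed requires A to vary as 1/r. This sketch uses arbitrary, illustrative values for the dot size and radial positions.

```python
import math


def ring_volume(area, r_centroid):
    """Pappus's theorem: volume swept by revolving a planar area about the axis."""
    return 2.0 * math.pi * r_centroid * area


def area_for_fixed_volume(volume, r_centroid):
    """Cross-sectional area a ring needs at radius r_centroid to keep its volume."""
    return volume / (2.0 * math.pi * r_centroid)


# Illustrative dot of radius 0.01 drawn at r = 0.8 (arbitrary units).
a0 = math.pi * 0.01 ** 2
v0 = ring_volume(a0, 0.8)
for r in (0.8, 0.4, 0.1):
    # Area relative to the starting dot grows as 0.8 / r toward the axis.
    print(r, area_for_fixed_volume(v0, r) / a0)
```

A particle that moves from r = 0.8 to r = 0.1 would need a dot eight times larger to represent the same volume, which is why constant-size dots leave gaps near the axis.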

Obviously we aren't doing this with our dots of constant size. When particles rush under the plunger as it is withdrawn they fail to cover all the new area created there because they don't grow in size to satisfy conservation. This explains the growth and decline of empty space along the center axis as the plunger is raised up and down in the visualizations.

This isn't a problem per se, we just need to be aware of it and avoid drawing unwarranted conclusions about conservation of mass from these artifacts of the visualization. The only thing conserved is the number of particles, which have no volume. Their purpose is to provide a pointwise representation of the concentration field and they do so accurately at modest computational effort.

Working on a mystery

These short movies are from my research in the late 1990s. They show ACRT (accelerated crucible rotation technique) applied to two different vertical Bridgman systems.

Why are they so different? The system on the right experiences hardly any mixing at all, even though its rotation rate is three times larger than the system on the left (30 rpm versus 10 rpm). But it is also much smaller (1.5 cm versus 10 cm inner diameter) and its flow is much less inertial. These results are reminiscent of the animations shown in the "Drifting petals" entry below where inertia, or lack of it, has a big impact on the nature and extent of mixing.
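One rough way to compare inertia in the two systems is a rotational Reynolds number, Re = Ωa²/ν, where Ω is the rotation rate and a is the inner radius. The sketch below assumes both melts have the same kinematic viscosity. The value `NU` is hypothetical, and only the ratio is meaningful.

```python
import math


def rotational_reynolds(rpm, inner_diameter_m, nu_m2_s):
    """Re = Omega * a**2 / nu, with Omega in rad/s and a the inner radius in m."""
    omega = rpm * 2.0 * math.pi / 60.0
    a = 0.5 * inner_diameter_m
    return omega * a * a / nu_m2_s


NU = 3e-7  # hypothetical melt kinematic viscosity (m^2/s), same for both systems
re_left = rotational_reynolds(10, 0.10, NU)    # 10 cm system at 10 rpm
re_right = rotational_reynolds(30, 0.015, NU)  # 1.5 cm system at 30 rpm
print(f"Re_left = {re_left:.0f}, Re_right = {re_right:.0f}, ratio = {re_right / re_left:.4f}")
```

Tripling the rotation rate does not come close to offsetting the smaller radius. Because the ratio goes as the square of the radius, the small system ends up roughly fifteen times less inertial by this measure.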

I will be revisiting these problems soon for an in-depth analysis that only Cats2D can deliver.

Drifting petals

I have been working on a series of segregation studies in vertical Bridgman growth and some of its variants. One area of interest is the zone leveling effect of a submerged baffle. Zone leveling is a term sometimes applied to processes that tend to redistribute chemical composition uniformly in the growth direction of the crystal. Diffusion-limited growth has a zone leveling effect if convective mixing can be avoided. A submerged baffle isolates a small confined area near the growth interface from convection in the bulk, so we expect some zone leveling to occur compared to a conventional VB system. This is indeed the case. More on this later.

Modeling segregation in melt crystal growth is difficult. Almost no one can do it well. Mass transfer is inherently time-dependent. Diffusion in liquids is always slow, so the equations are stiff in time and space, placing strict demands on element size and time step size. Failure to observe these demands often ends in numerical instability.

There are other challenges. In a moving boundary problem the domain itself is in motion, which raises important issues in how the differential equations and their discretization are cast. Cats2D uses an arbitrary Lagrangian-Eulerian (ALE) form of the equations that conserves mass and momentum accurately. Conservation boundary conditions also must be formulated carefully. Gross failure to conserve mass is likely to occur if these things are not done precisely.

In the course of this work I have run some tests on mass transport in an isothermal system in which flow is generated by a pumping motion of the baffle. The surface of the liquid rises and falls as the baffle is raised and lowered. The mesh elements expand and contract as well, so the computational domain is in motion relative to the laboratory reference frame. Many things can go wrong in a simulation like this, making it an excellent test of the code. Primarily I ran these tests because I am obsessive about validating the code, but the results are sufficiently interesting to show in their own right.

Below I show mixing of two layers of liquid initially having different concentrations of a fast diffusing molecule. The inner diameter is 2 cm and the total amount of liquid is 13.2 milliliters. The viscosity is 0.1 Pa·s, approximately that of a vegetable oil at room temperature. The diffusion coefficient is 10⁻⁸ m²/s, two to three times faster than typical for small molecules. I used a high value to soften the numerics a bit. Even then the Peclet number equals 10,000, indicating that mass transport is strongly convected under these conditions. In one case I've made the layers equal in volume, in the other case I've restricted the lower layer to the region under the baffle. The rate of motion in the animations is depicted in real time.

Mixing seems unusually slow. The Reynolds number is unity, near the Stokes limit at which flow is reversible, so fluid tends to return to a place near where it started when the baffle returns to its initial position. The interface between areas of high and low concentration is stretched by the flow, which enhances mass transfer, but diffusion across this boundary is slow and there are no large scale motions to carry mass across it. The fluid under the baffle has hardly been mixed after five up and down strokes of the baffle.

In the next results I have used the viscosity of water, 0.001 Pa·s, which increases the Reynolds number to 100. The added inertia makes the flow highly irreversible. Wakes churn around the baffle with a salutary effect on mixing. The improvement is dramatic. I've used the same diffusion coefficient as before, so the improvement is entirely attributable to convective mixing. The boundary between areas of high and low concentration stretches rapidly and spirals around itself, quickly reducing the mixing length to the diffusion length. Two strokes of the baffle are enough to mix the layers.
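The quoted Peclet and Reynolds numbers can be reproduced from the stated properties once a length scale and a velocity scale are chosen. The text does not give these scales. This sketch assumes the 2 cm inner diameter as the length scale, a characteristic velocity of 5 mm/s, and a density of 1000 kg/m³. These values were picked because they are consistent with Pe = 10,000 and Re = 1 and 100; they are not the actual scales used in Cats2D.

```python
def peclet(U, L, D):
    """Convective versus diffusive mass transport."""
    return U * L / D


def reynolds(rho, U, L, mu):
    """Inertial versus viscous forces."""
    return rho * U * L / mu


# Assumed scales (not stated in the text).
L = 0.02      # m, inner diameter
U = 0.005     # m/s, characteristic baffle-driven velocity
RHO = 1000.0  # kg/m^3
D = 1e-8      # m^2/s, diffusion coefficient from the text

print(f"Pe = {peclet(U, L, D):.0f}")
print(f"Re (0.1 Pa.s, oil)     = {reynolds(RHO, U, L, 0.1):.1f}")
print(f"Re (0.001 Pa.s, water) = {reynolds(RHO, U, L, 0.001):.1f}")
```

The Peclet number does not depend on viscosity. Switching to water's viscosity therefore raises the Reynolds number a hundredfold while leaving the diffusion stiffness unchanged, which is why any gain in mixing can be credited to convection.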

Conservation of mass is very accurate with total mass loss or gain less than 0.1% over the entire time integration. To achieve this I used a fine discretization with 332,391 unknowns. Variable time stepping with error control required approximately 1000 time steps per period at this Reynolds number. The wall clock time per time step on my modest laptop computer was about 10 seconds, so these animations took about 15 hours each to compute and draw the frames.

These computations allow very little compromise in numerics. A fine grid is required throughout the entire domain to capture the many sharp internal layers created by the swirling flow. Very small time steps and a high quality second-order time integrator are needed to conserve mass accurately (BDF2 was used in these computations). Numerical instability is difficult to avoid unless active time step error control is used. A robust linear equation solver is needed, and you'll be waiting around for a long time if it isn't very fast.
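BDF2 gets its second-order accuracy from a three-level difference formula. The sketch below applies constant-step BDF2 to the scalar model problem y' = λy and checks that halving the step cuts the error by about four. It is only an illustration of the method. Cats2D uses variable steps with error control, and this is not its implementation. The first step is seeded with the exact solution so that only BDF2's own error is measured.

```python
import math


def bdf2_decay(lam, t_end, n_steps):
    """Integrate y' = lam * y, y(0) = 1, with constant-step BDF2."""
    h = t_end / n_steps
    y_prev, y = 1.0, math.exp(lam * h)  # exact starting value at t = h
    for _ in range(n_steps - 1):
        # (3 y_{n+1} - 4 y_n + y_{n-1}) / (2h) = lam * y_{n+1}, solved for y_{n+1}
        y_prev, y = y, (4.0 * y - y_prev) / (3.0 - 2.0 * h * lam)
    return y


exact = math.exp(-1.0)
errors = [abs(bdf2_decay(-1.0, 1.0, n) - exact) for n in (20, 40, 80)]
for coarse, fine in zip(errors, errors[1:]):
    print(f"error ratio on halving the step: {coarse / fine:.2f}")
```

A first-order method such as backward Euler would give a ratio near two. Over the thousands of steps in a run, that gap becomes a large difference in how accurately mass is conserved.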

In a coming post I will discuss an alternative strategy for computing mixing at very high Peclet numbers that is more stable and less expensive than the convective-diffusion approach used here. Aesthetically pleasing images can be expected, as usual.

Things that really bug me

Recently I came across the following sentence in an article at a major news site: "Powerful legs help the cassowary run up to 31 miles per hour through the dense forest underbrush."

I think we can safely assume that no one has accumulated data of sufficient quantity and accuracy to confidently assert that cassowaries reach a top speed of precisely 31, rather than say 30 or 32, miles per hour. The phrasing "up to" suggests that 31 is a ballpark figure. Then why the extra digit of precision? Is it significant?

Undoubtedly this number appeared in the original source as 50 kilometers per hour, which is a round number. I take this number to mean more than 40 and less than 60. This converts to a range of plus or minus six miles per hour. But American news sources generally convert metric quantities to our customary units, and report the result to a greater precision than implied by the original source.
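The conversion, and the rounding that would preserve the source's implied precision, takes only a few lines of Python. The 40 to 60 km/h range is the reading of "50" given above.

```python
KM_PER_MILE = 1.609344  # exact by definition


def kmh_to_mph(kmh):
    return kmh / KM_PER_MILE


low, nominal, high = (kmh_to_mph(v) for v in (40, 50, 60))
print(f"{nominal:.1f} mph (range {low:.1f} to {high:.1f}, +/- {(high - low) / 2:.1f})")
print(round(nominal, -1))  # rounded to the precision the original implied
```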

I sense that this practice creates a cognitive bias in how we assess the reliability of a quantitative result. A rough estimate, perhaps of dubious reliability, is converted to a more precise value, suggesting that it was obtained by comparably precise measurement. In the case of the cassowary, the intent of the original source would have been more closely observed by rounding 31 to 30 miles per hour after the conversion.

Another such number is 3.9 inches, which comes up more often than you might think. What does that equal in metric? A very round 10 centimeters. I once watched a television documentary which claimed that satellites are capable of detecting a moving bulge on the surface of the ocean "as small as 3.9 inches," which might be used to detect submarines, for example. Expressing it this way conjures a hand held ruler where we can easily distinguish 3.7 inches (it's just noise) from 4.2 inches (it's a submarine). From this perspective, a signal of 6 inches sounds strong.

Suppose we say "as small as 0.1 meters" instead of 3.9 inches, and for good measure add that a satellite flies at an orbit of 10⁵ meters or higher. A good engineer will note that the claimed precision of the measurement is 10⁻⁶, or one millionth, of the distance from the satellite to the measurement, and will understand that this is a difficult measurement against a noisy background. From this perspective a signal of 0.2 meters with a precision of 0.1 meters does not sound nearly as convincing as a signal of 7.8 inches with a precision of 3.9 inches.
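The same comparison takes a few lines of Python. The 10⁵ m altitude is the lower bound mentioned above, and rounding the bulge to 0.1 m reflects the precision the "10 centimeters" figure actually carries.

```python
M_PER_INCH = 0.0254  # exact by definition

bulge_m = 3.9 * M_PER_INCH      # the documentary's "3.9 inches"
signal_m = 7.8 * M_PER_INCH     # a signal twice that size
orbit_m = 1e5                   # "10^5 meters or higher"
rel_precision = 0.1 / orbit_m   # bulge rounded to 0.1 m

print(f"3.9 in = {bulge_m:.4f} m, 7.8 in = {signal_m:.4f} m")
print(f"relative precision: {rel_precision:.0e}")
```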

Stir it up

Mixing of a stratified liquid by a periodic pair of Lamb-Oseen vortexes. Mouse over image to make it go (it might take a few moments to load).

Periodic boundary conditions on the temperature field don't make much physical sense, but I was having a lazy day.

Jumping the shark

I just came across this line in an abstract from a recent meeting on crystal growth modeling:

Finally, we discuss plans to deploy of our model as a user-friendly software package for experimental practitioners to aid in growth of novel materials.

It is a vaporware announcement, and I am its target. How awesome is that?

In my experience—and I dare say it is considerable—developing a "user-friendly software package" in physics-based computing is difficult, particularly for nonlinear multiphysics problems with free boundaries. I'm not even sure it is possible, the wonderful Cats2D notwithstanding.

Why would anyone who lacks a strong background in software development believe they could accomplish this using inexperienced, itinerant labor? How about a person who has never even used, much less written, such software?

Cats2D art machine

Instructions: Choose one flow type, one scalar field type, and one particle path type. Go from there.

To make these I chose Hill's spherical vortex, principal angle of extension, and streaklines.

Tee shirts. Mouse pads. Maybe even a small arts grant. The possibilities boggle the imagination.

Cats2D onsite training

Rollin' and Tumblin'

A small but non-infinitesimal volume of fluid can experience shear and extensional deformation in addition to linear translation and rigid rotation. A useful discussion is found in Sec. 1.3.7 of these notes on kinematic decomposition of motion, and here too, particularly Sec. 3.6 on principal strain axes. Viewed this way, ordinary Couette flow can be characterized as a superposition of linear translation, rigid rotation, and pure extension.

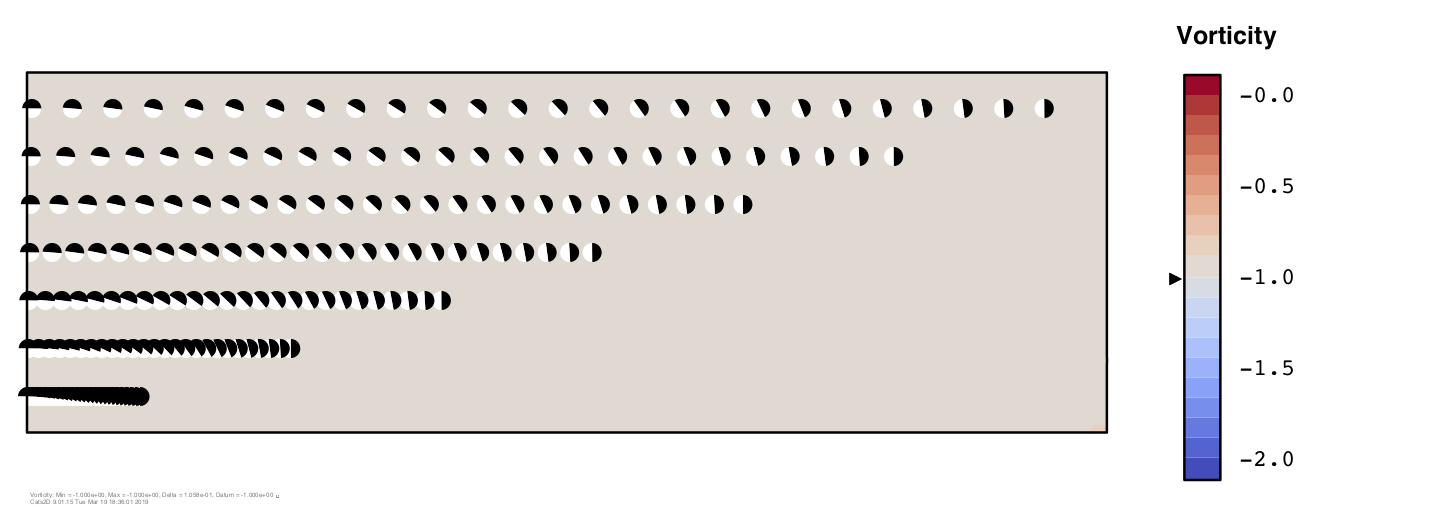
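The decomposition is easy to make concrete for Couette flow u = γy, v = 0. The velocity gradient splits into a symmetric rate-of-strain part and an antisymmetric rotation part. The uniform velocity at a point supplies the linear translation. This is a minimal Python sketch using plain lists, with γ = 1 as an illustrative value.

```python
def decompose(grad):
    """Split a 2x2 velocity gradient grad[i][j] = d u_i / d x_j into its
    symmetric (rate of strain) and antisymmetric (rigid rotation) parts."""
    strain = [[0.5 * (grad[i][j] + grad[j][i]) for j in range(2)] for i in range(2)]
    spin = [[0.5 * (grad[i][j] - grad[j][i]) for j in range(2)] for i in range(2)]
    return strain, spin


gamma = 1.0                         # shear rate of Couette flow u = gamma * y, v = 0
grad = [[0.0, gamma], [0.0, 0.0]]   # only du/dy is nonzero
strain, spin = decompose(grad)
vorticity = grad[1][0] - grad[0][1]  # dv/dx - du/dy

print(strain)     # [[0.0, 0.5], [0.5, 0.0]]: extension/compression along 45 degree axes
print(spin)       # [[0.0, 0.5], [-0.5, 0.0]]: rigid rotation at rate |vorticity| / 2
print(vorticity)  # -1.0: clockwise rotation
```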

Below I show streaklines of particles in Couette flow. Vorticity is constant and we can see that particles released at the same time have all rotated the same amount regardless of velocity or distance traveled. The rotation rate is one half the vorticity in radians per time unit. After Pi time units the particles furthest to the right have rotated a quarter turn (Pi/2).

Shown next is Poiseuille flow in planar coordinates, which has a linear distribution of vorticity. Particles rotate clockwise in the lower half of the channel where vorticity is negative, and counter-clockwise in the upper half where vorticity is positive. Rotation is fastest near the walls and drops to zero at the center line, plainly evident from the absence of particle rotation there.

These plots do a good job illustrating the translating, rolling motion of particles in simple shear flow. But a non-infinitesimal volume of fluid also experiences deformation in shear flow because one side of the particle is moving faster than the other. Particles are stretched at a 45 degree angle to the streamlines, illustrated in the sketch below. This special direction is a principal axis of stress, an axis along which flow is purely extensional.

In mathematical terms, the principal directions of a flow are given by the eigenvectors of the deviatoric stress tensor. For Newtonian and other isotropic fluids these axes are orthogonal to one another. The eigenvalues give the rate of expansion or compression along the principal axes. The trace of the tensor is zero for incompressible flow in Cartesian coordinates; hence, in planar two-dimensional problems, compression in one principal direction is always equal in magnitude to extension in the other direction. The trace is not zero in cylindrical coordinates, because the coordinate system itself expands in the radial direction, so radial flow contributes an expansion at a rate -2V/R.

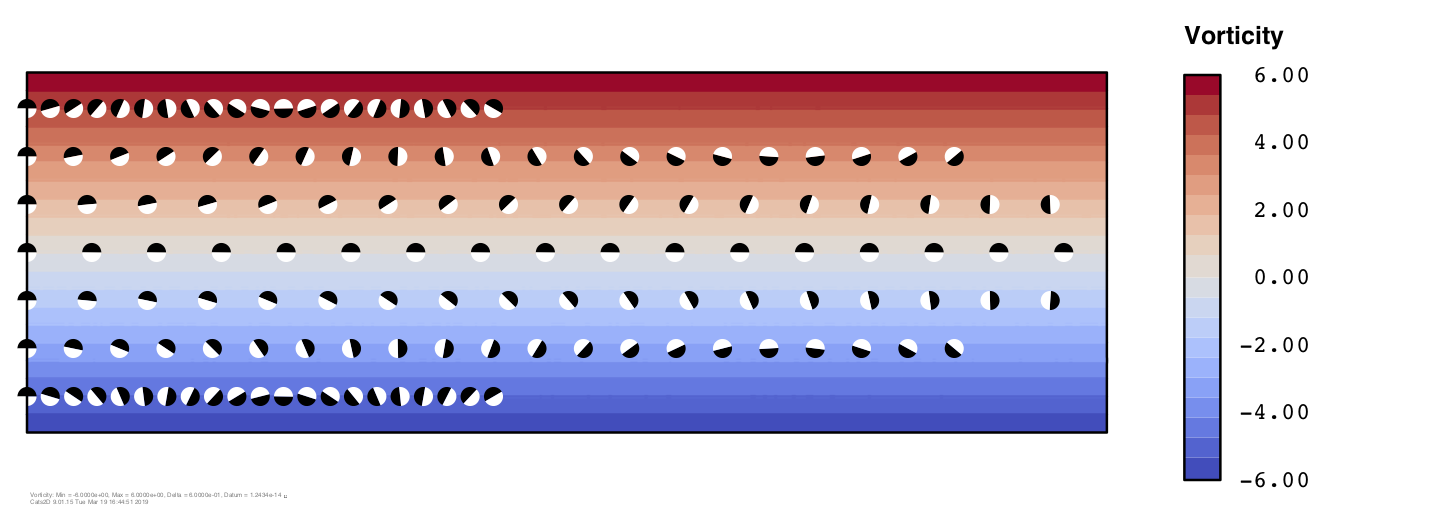
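For a symmetric 2×2 tensor [[a, b], [b, c]], the principal values are the two roots of the characteristic quadratic. The axis of the larger root lies at half the angle atan2(2b, a − c), which is the Mohr's circle construction. The sketch below is a generic illustration of that algebra and does not reproduce Cats2D's code.

```python
import math


def principal_axes(a, b, c):
    """Principal values and extension-axis angle (degrees) of the symmetric
    tensor [[a, b], [b, c]]. The values are the roots of
    lambda**2 - (a + c) * lambda + (a * c - b**2) = 0."""
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)                    # Mohr's circle radius
    angle = 0.5 * math.degrees(math.atan2(2.0 * b, a - c))   # axis of the larger root
    return mean + radius, mean - radius, angle


# Planar simple shear with unit shear rate: rate of strain [[0, 0.5], [0.5, 0]].
ext, comp, angle = principal_axes(0.0, 0.5, 0.0)
print(ext, comp, round(angle, 6))  # extension = -compression, axis at 45 degrees
```

Because the planar tensor is traceless, the two roots are equal and opposite, which is the "compression equals extension" statement above.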

I have added a new vector plot type to Cats2D that shows the principal axis of extension in a flow scaled by the extension rate. Here is what it looks like for Poiseuille flow, where we see the same 45 degree angle everywhere, largest near the wall and zero at the center line.

Next I show the principal axis of extension in Hill's spherical vortex plotted on top of stream function contours. Along the vertical center line we can see that the principal axis forms a 45 degree angle with the streamlines, indicating shear flow. Near the horizontal center line, the angle is either close to zero or close to 90 degrees, indicating extension or compression. The largest rate of extension happens at the rear of the vortex (the left side), and the largest rate of compression occurs at the front of the vortex (the right side).

Elsewhere in the vortex the angle formed between the direction of flow and the principal axis of extension is intermediate between extensional and shear flow. I have added a new contour plot type to Cats2D that shows this quantity, the principal angle. In pure shear flow this angle equals 45 degrees, in pure extension it equals zero degrees, and in pure compression it equals 90 degrees. Shown below is the principal angle in Hill's spherical vortex, which has large areas of extension (blue) and compression (red) in the direction of flow. The angle is discontinuous at the center of the sphere, and it is singular at the vortex center, features which are awkward to depict using contours, so I've omitted contours in the upper half of the sphere. I think the continuous color image is more attractive without them.
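One way to compute such a principal angle is to take the smallest angle between the flow direction and the principal axis of extension, folded into 0 to 90 degrees because an axis is a line, not an arrow. This is a hypothetical formulation for planar strain-rate tensors. Cats2D's exact definition may differ, for example in how it treats the cylindrical terms.

```python
import math


def principal_angle(u, v, a, b, c):
    """Angle in degrees (0..90) between the flow direction (u, v) and the
    principal axis of extension of the strain-rate tensor [[a, b], [b, c]].
    0 = extension along the flow, 45 = shear, 90 = compression along the flow."""
    flow = math.atan2(v, u)
    extension_axis = 0.5 * math.atan2(2.0 * b, a - c)
    diff = abs(math.degrees(flow - extension_axis)) % 180.0  # axes are lines
    return min(diff, 180.0 - diff)


print(principal_angle(1.0, 0.0, 0.0, 0.5, 0.0))    # simple shear
print(principal_angle(1.0, 0.0, 1.0, 0.0, -1.0))   # stretching along the flow
print(principal_angle(1.0, 0.0, -1.0, 0.0, 1.0))   # compression along the flow
```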

In this animation we can see the line of particles extending in the blue regions and compressing in the red regions as it circles the sphere.

I am having a lot of fun playing around with these new types of plots, and I am rediscovering basic concepts in fluid dynamics at the same time. Cats2D would make a great teaching tool.

Whine and cheese

Let's talk about things that annoy programmers. Three in particular stand out: programming is way harder than it looks, the boss doesn't know how to code, and unclear project specifications are a nightmare. Obviously these are closely related phenomena.

Programming isn't a binary skill, but I sense that many people perceive it that way. A programmer seems like a plumber or a nurse or maybe even a public notary. Either you are one, or you aren't one. At some point knowledge of plumbing saturates and there isn't much in the world of plumbing to learn anymore. Programming ability doesn't saturate this way. It takes years to grasp the basics, and for those who aspire to mastery, the learning never stops. The range of ability, experience, and talent among self-identified programmers is enormous.

Programming is slow, laborious work that demands constant deliberation and care. Bad things happen when it is rushed. Not everything needs to be perfect, but cutting corners in the wrong places will cripple a code. Acquiring the skills to do this well in a large complicated code takes much longer than many people imagine. It is often difficult to successfully extend the capabilities of a large code, and it is always easy to break it trying.

I once knew a professor who boasted his student was going to write a code to solve the Navier-Stokes equations using the finite element method, and do this in two weeks according to a time line they had written down together. An existing linear equation solver would be used, but otherwise the code would be written from scratch in Fortran 77 (this was in the early 1990s).

The absurdity of this cannot be overstated. Even Goodwin could not do this in fewer than three months, and he's a freak. Six months would be fast for a smart, hard working graduate student to do it. Being asked to accomplish something in two weeks when it really takes six months is confusing and demoralizing.

Unfortunately the situation hasn't gotten any better in the intervening quarter century.

March of the Penguins

Lisa is probably the only person ever to walk out during a showing of March of the Penguins. She didn't like the way the penguins were treated. If you've seen this movie you might remember how the penguins gang together in a large circular mass to protect themselves against the wind, and how they cooperatively circulate to keep moving cold penguins off the exposed perimeter.

In Hill's spherical vortex, vorticity varies linearly from zero at the axial centerline to a maximum of 5 at the poles of the sphere, causing the penguins, er, particles, to spin as they circulate. Mouse over the image below to see the effect of vorticity as they march around my imaginary kingdom. The particles spin through 5/4 of a turn every time they orbit the vortex center, regardless of their initial position.
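You can check the spin per orbit by integrating particle paths through the exact solution given in the "Hill's spherical vortex" entry (U = 1 - Z² - 2R², V = ZR, vorticity 5R) while accumulating rotation at half the vorticity. This Python sketch uses a fixed-step RK4 integrator and is not how Cats2D integrates pathlines. `turns_per_orbit` is a hypothetical helper that only handles starting points on Z = 0 between the vortex center (R = 1/√2) and the sphere surface. Analytically, the integral of R dt around every closed streamline equals π, so the accumulated spin is (5/2)π radians per orbit whatever the starting radius.

```python
import math


def rhs(state):
    """Time derivatives of (Z, R, theta) for a particle in Hill's spherical vortex."""
    z, r, _theta = state
    u = 1.0 - z * z - 2.0 * r * r   # axial velocity U
    v = z * r                       # radial velocity V
    spin = 0.5 * (5.0 * r)          # spin rate = half the vorticity 5R
    return (u, v, spin)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(state)
    k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def turns_per_orbit(r0, dt=1e-3, t_max=200.0):
    """Spin (in turns) accumulated over one closed orbit starting at (Z, R) = (0, r0)."""
    if not (1.0 / math.sqrt(2.0) < r0 < 1.0):
        raise ValueError("r0 must lie between the vortex center and the sphere surface")
    state = (0.0, r0, 0.0)
    seen_negative = False
    t = 0.0
    while t < t_max:
        new = rk4_step(state, dt)
        t += dt
        if new[0] < 0.0:
            seen_negative = True
        # The orbit closes when Z crosses zero from above on the outer side.
        if seen_negative and state[0] > 0.0 and new[0] <= 0.0:
            frac = state[0] / (state[0] - new[0])  # linear interpolation in the step
            theta = state[2] + frac * (new[2] - state[2])
            return theta / (2.0 * math.pi)
        state = new
    raise RuntimeError("orbit did not close before t_max")


for r0 in (0.75, 0.85, 0.95):
    print(r0, round(turns_per_orbit(r0), 4))
```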

These visualizations show linear translation and rigid rotation of small particles. A small but non-infinitesimal volume of fluid can also experience shear and extensional deformation. These characteristics of a flow can have a strong impact on polymer solutions and other liquids with strain-dependent properties. My next entry on principal axes of stress in a flow will delve into this issue more deeply.

Hill's spherical vortex

There is a wonderfully simple solution representing Stokes flow recirculating inside a sphere reported in 1894 by Micaiah John Muller Hill. In its stationary form, axial velocity (left to right in the picture below) is given by U = 1 - Z² - 2R², and radial velocity (upward in the picture) is given by V = ZR. Streamlines of the exact solution are shown in the top half of the sphere, and streamlines computed by Cats2D are shown in the bottom half. They are indistinguishable.

The velocity components are shown next, U in the top half and V in the bottom half of the sphere. These are computed by the code and they look identical to the exact solution given by the formulas above. The error between exact and computed solutions is 0.02% on this discretization of 71,364 equations.

This solution is particularly useful for testing derived quantities such as vorticity, pressure, and shear stress. The computation of these quantities is a complicated task in the code, and errors might be introduced in the post-processor even though the code computes the solution correctly. The analytical form of the exact solution is so simple it is possible to derive these quantities by inspection. Pressure equals -10Z, for example, and vorticity equals 5R. Computed values of these quantities are shown below and they match the exact solutions perfectly.
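The "by inspection" results can themselves be double-checked numerically. This sketch evaluates axisymmetric continuity, vorticity, and the Stokes momentum balance of the exact solution using centered finite differences. It assumes unit viscosity and the nondimensional form given above. It checks the analytical formulas only, not the Cats2D post-processor.

```python
H = 1e-3  # finite-difference step; exact up to rounding for these polynomial fields


def U(z, r):
    return 1.0 - z * z - 2.0 * r * r


def V(z, r):
    return z * r


def d_dz(f, z, r):
    return (f(z + H, r) - f(z - H, r)) / (2.0 * H)


def d_dr(f, z, r):
    return (f(z, r + H) - f(z, r - H)) / (2.0 * H)


def d2(f, z, r, dz, dr):
    """Centered second difference along direction (dz, dr)."""
    return (f(z + dz * H, r + dr * H) - 2.0 * f(z, r) + f(z - dz * H, r - dr * H)) / H**2


def check(z, r):
    """Residuals of the exact solution at (z, r), with unit viscosity."""
    continuity = d_dz(U, z, r) + d_dr(V, z, r) + V(z, r) / r
    vorticity = d_dz(V, z, r) - d_dr(U, z, r)
    lap_u = d2(U, z, r, 1, 0) + d2(U, z, r, 0, 1) + d_dr(U, z, r) / r
    lap_v = (d2(V, z, r, 1, 0) + d2(V, z, r, 0, 1)
             + d_dr(V, z, r) / r - V(z, r) / r**2)
    return continuity, vorticity, lap_u, lap_v


for z, r in ((0.3, 0.5), (-0.2, 0.7)):
    cont, vort, lap_u, lap_v = check(z, r)
    # Expect: continuity 0, vorticity 5r, dp/dz = lap_u = -10, dp/dr = lap_v = 0
    print(f"{cont:.1e}  {vort:.4f} (5r = {5 * r:.4f})  {lap_u:.4f}  {lap_v:.1e}")
```

The momentum balances confirm that p = -10Z: the axial Laplacian of U is -10 everywhere, and the radial balance has no pressure gradient.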

Similar plots can be made for normal and shear stresses, all components of which are linear in either R or Z.

Next I show principal stresses computed by the code. Extension is shown in the bottom half of the sphere and compression is shown in the top half. These match perfectly their analytical forms derived from the exact solution, which are nonlinear in R and Z with simple closed forms as roots of a quadratic equation.

These might appear to be weak tests with the notion that errors are masked by the apparently simple nature of the solution. But the equations were solved on the mesh shown below, the elements of which do not align with the coordinate directions. The equations are transformed to a local coordinate system on each element, and solution variables are interpolated in that system. Pressures and velocity gradients are discontinuous at element boundaries and must be smoothed by a least squares projection onto a continuous basis set before contouring. A myriad of things could go wrong that would show up very clearly in these plots.

We never tire of testing at Cats2D and I was gratified to verify all manner of derived variables in the post-processor against this exact solution. But Hill's spherical vortex is also a great problem for working through basic concepts in fluid dynamics. Later I will post some instructive animations that illustrate the effect of vorticity in this flow, and I will also show some new vector plots of principal directions, or axes, of extension and compression that I've developed recently.

Goodwin introduced me to this solution, the story of which is funny enough to share. First let me say that he has been acting rather agitated about the web site lately. New entries aren't posted often enough, the content is unoriginal, there is too much social commentary, and the ideas are stale and thin. Plus some unstated concerns about my state of mind. When I started writing this entry I realized it was going to be the second one in a row to mention him. Then it hit me: I had posted eleven consecutive entries without including his name once. I had mentioned my father, my childhood surfing buddy, and Bob Ross, but not him, despite his passing resemblance to Bob Ross. I hope this entry reassures him that he remains the star of my web site.

The humorous part is that he had to turn to me for help setting up the Cats2D input files to solve this problem. Though I don't show it here, there is also a flow computed outside the sphere, and he couldn't figure out how to specify the boundary conditions at the surface of the sphere to obtain a convergent solution. I immediately recognized that he was making exactly the same mistake I had warned against in my Just sayin' entry found down this page. This was an entry he had been particularly critical of, the irony of which cannot be overlooked. He said it was mundane, that it lacked eye candy, and for goodness' sake it didn't have a single clever turn of phrase (I swear that is exactly how he put it). So I sent him straight down the page to re-read that entry until he got things straight: know your methods.

Circling the drain

Goodwin. He's an awesome pest sometimes (lately he has taken to calling himself the QA/QC department), but once in a while he comes up with a useful suggestion. This time it was animated particle rotation. Of course I had to do all the work.

Mouse over the images to see the action when a line of particles is released into the Lamb-Oseen vortex pair in a periodic domain. Particles rotate at one-half the vorticity of the flow. Below left is the full periodic cell; below right is a close-up in slow motion.
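As a rough illustration of the rotation rule, here is a minimal Python sketch for a single isolated Lamb-Oseen vortex, not the periodic vortex pair animated above and not Cats2D code. The helper names and the parameters `gamma` (circulation) and `nu` (kinematic viscosity) are hypothetical. It evaluates the standard azimuthal velocity and vorticity of the vortex and gives a small torque-free particle a spin rate of one-half the local vorticity; in the solid-body core this matches the angular velocity of the fluid itself.

```python
import math

# Hypothetical helper names for illustration; not Cats2D code.


def lamb_oseen(r, t, gamma=1.0, nu=1.0):
    """Return (u_theta, omega) for an isolated Lamb-Oseen vortex at radius r > 0, time t > 0."""
    core = 4.0 * nu * t  # square of the viscous core radius, r_c^2 = 4 nu t
    # expm1 keeps 1 - exp(-r^2/core) accurate when r is small
    u_theta = gamma / (2.0 * math.pi * r) * -math.expm1(-r * r / core)
    omega = gamma / (math.pi * core) * math.exp(-r * r / core)
    return u_theta, omega


def particle_spin_rate(omega):
    """Angular velocity of a small torque-free particle: half the local vorticity."""
    return 0.5 * omega


# Near the axis the vortex turns like a solid body, so the particle spins
# at the same rate as the fluid orbits: u_theta / r is close to omega / 2.
r = 1e-3
u, w = lamb_oseen(r, t=1.0)
print(u / r, particle_spin_rate(w))
```

Far from the core the vorticity decays to zero while the velocity approaches the potential vortex value gamma / (2 pi r), so particles there translate around the vortex without spinning.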

Ideas like this are why it is handy to keep him around.

Feed your head

Suppose you are an alien humanoid from the planet Ceti Alpha IV and have just arrived on planet Earth after a long voyage. Naturally you want to unwind before conquering the planet, so you'll need to determine which intoxicants are available. Your parents raised you to make good choices, so you'll be looking for something that has low potential for both dependence and accidental overdose. On your way to the White House to demand fealty of all Earthlings you check in with the District of Columbia Department of Health, which provides you with this "safety profile" (their wording) of commonly available terrestrial intoxicants:

It turns out that your physiology is remarkably similar to that of humans,* so this chart should be valid for you. The closer you get to the upper right-hand corner of this plot, the hairier things get. It looks like you'll never escape the pit if you wander up there and start fooling around. But look at the lower left corner. That "LSD" stuff looks like you couldn't overdose on it if you tried, and it isn't habit forming, either. With a safety profile like that, LSD could become your everyday thing, and a big money maker back on the home planet, too. So you figure it will be cool to load up on some good acid before you hit the White House to make that fealty demand. But, for some reason, when you finally get around to the White House you forget why you came in the first place.

Obviously something is missing from this picture, since it fails to consider any issues beyond dependence and lethal dosage. We could add a third axis here labeled "consequential side effects" and I'm sure that LSD is pretty far out on this axis compared to caffeine, for example. The problem here, and it is a common one, is a failure to consider all the important criteria. Once the number of criteria exceeds two or three, we cannot easily portray them all on a flat sheet of paper. Moreover, we tend to focus on those criteria we can most easily quantify, rather than grappling with less tangible things that are nevertheless important. Every visual representation of a complex problem creates a cognitive bias in how we interpret the problem. We shouldn't settle for just one view of a situation. We should always ask what is missing from this picture.

* The reason behind this is explained in the 146th episode of Star Trek: The Next Generation, The Chase

The highways of my life

Morning

Afternoon

I enjoy a visit to the Scripps Pier Cam once in a while.

The golden rule

Conserving mass is essential. My intuition and experience tell me it is far more important to conserve mass accurately than it is to conserve momentum when solving the Navier-Stokes equations. Mass conservation is the zeroth moment of the Boltzmann transport equation, whereas momentum conservation is the first moment. Compromising the zeroth moment of an approximation seems like a bad way to start.
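To make the moment language concrete, here is a minimal Python sketch, assuming a one-dimensional Maxwellian distribution sampled on a uniform velocity grid with the particle mass folded into f (the grid and helper names are illustrative). Summing f over velocity gives the zeroth moment, the density; weighting each sample by its velocity gives the first moment, the momentum density, from which the mean velocity follows.

```python
import math

# Illustrative 1D example; helper names are hypothetical.


def maxwellian(c, rho, u, theta):
    """1D Maxwellian: density rho, mean velocity u, theta = kT/m (velocity variance)."""
    return rho / math.sqrt(2.0 * math.pi * theta) * math.exp(-(c - u) ** 2 / (2.0 * theta))


def velocity_moments(f, c, dc):
    """Zeroth and first velocity moments of sampled f(c) on a uniform grid."""
    m0 = dc * sum(f)                                # zeroth moment: density
    m1 = dc * sum(ci * fi for ci, fi in zip(c, f))  # first moment: momentum density
    return m0, m1


dc = 0.01
grid = [-10.0 + k * dc for k in range(2001)]
f = [maxwellian(ci, rho=1.2, u=0.5, theta=1.0) for ci in grid]
rho, mom = velocity_moments(f, grid, dc)
print(rho, mom / rho)  # density and mean velocity recovered from the moments
```

Any error in the zeroth moment corrupts the density that divides the first moment, which is one way to see why a compromised mass balance contaminates everything built on it.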

Cats2D uses a nine-noded element with biquadratic velocity interpolation and linear discontinuous pressure interpolation (Q2/P-1). This is a magic element that satisfies the LBB stability condition, meaning it is free of spurious pressure modes. It always delivers a solution, which fits the Cats2D "use it and forget it" philosophy. Another advantage is that mass is conserved exactly in an integral sense over each element. This is a strong statement of mass conservation compared to other common LBB-stable elements that lack this property, such as the biquadratic-bilinear (Q2/Q1) element.
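The element-wise statement holds because the linear discontinuous pressure space contains the constant function on each element, so one Galerkin continuity equation per element reads "area integral of div u = 0", which by the divergence theorem means zero net flux through that element's boundary. A continuous bilinear pressure basis has no such element-local test function. The Python sketch below is a hypothetical illustration on the undistorted reference square [-1, 1]² (no isoparametric mapping): it evaluates that residual for an arbitrary nine-node biquadratic velocity field with 3x3 Gauss quadrature and checks it against the boundary flux.

```python
import math

# Hypothetical illustration on the reference square [-1, 1]^2; not Cats2D code.
# 3-point Gauss-Legendre rule on [-1, 1], exact for polynomials up to degree 5
GAUSS = [(-math.sqrt(0.6), 5.0 / 9.0), (0.0, 8.0 / 9.0), (math.sqrt(0.6), 5.0 / 9.0)]
NODES = (-1.0, 0.0, 1.0)


def shape(i, s):
    """1D quadratic Lagrange basis function attached to node NODES[i]."""
    return (0.5 * s * (s - 1.0), 1.0 - s * s, 0.5 * s * (s + 1.0))[i]


def dshape(i, s):
    """Derivative of shape(i, s) with respect to s."""
    return (s - 0.5, -2.0 * s, s + 0.5)[i]


def interp(vals, x, y, ddx=False, ddy=False):
    """Biquadratic interpolant (or a first derivative) of 3x3 nodal values vals[i][j]."""
    fx = dshape if ddx else shape
    fy = dshape if ddy else shape
    return sum(vals[i][j] * fx(i, x) * fy(j, y) for i in range(3) for j in range(3))


def div_residual(U, V):
    """Continuity residual with test function q = 1: area integral of du/dx + dv/dy."""
    return sum(wx * wy * (interp(U, x, y, ddx=True) + interp(V, x, y, ddy=True))
               for x, wx in GAUSS for y, wy in GAUSS)


def net_boundary_flux(U, V):
    """Net outward flux of (u, v) through the four edges of the reference square."""
    flux = 0.0
    for s, w in GAUSS:
        flux += w * (interp(U, 1.0, s) - interp(U, -1.0, s))  # right and left edges
        flux += w * (interp(V, s, 1.0) - interp(V, s, -1.0))  # top and bottom edges
    return flux


# Arbitrary nodal values: the two integrals agree to rounding error.
U = [[0.3 * i * i - 0.2 * j for j in range(3)] for i in range(3)]
V = [[0.1 * (i + 1) * (j - 1) ** 2 for j in range(3)] for i in range(3)]
print(div_residual(U, V), net_boundary_flux(U, V))
```

Driving `div_residual` to zero on every element, as the discontinuous pressure basis does, therefore forces zero net mass flux out of every element.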

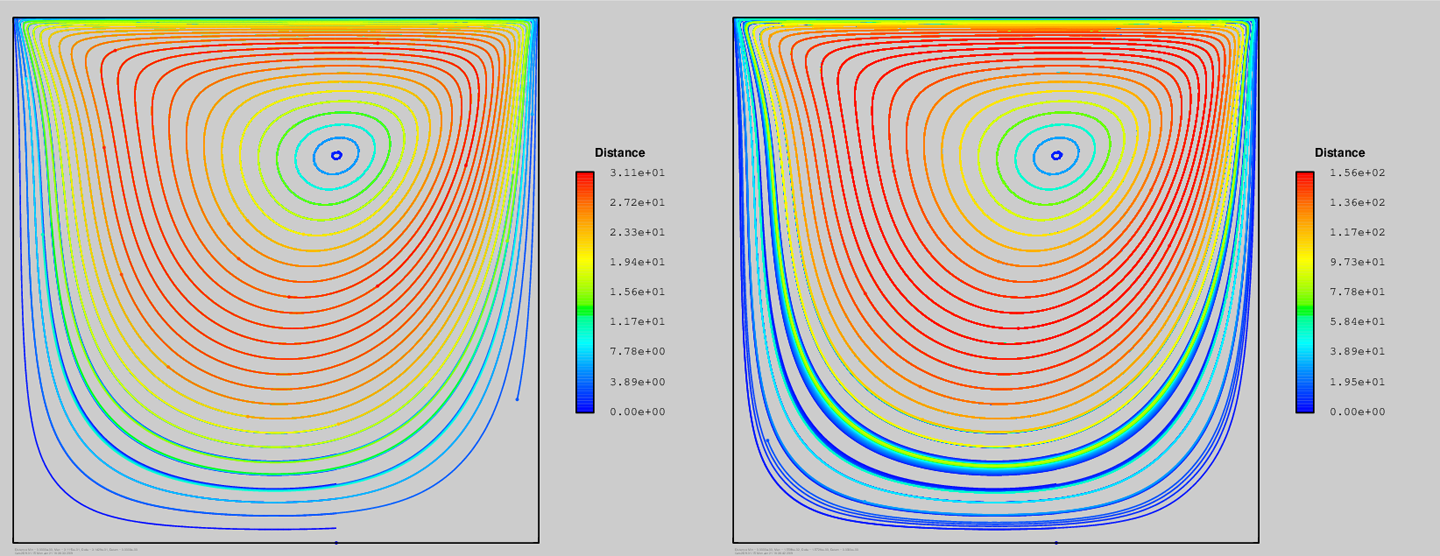

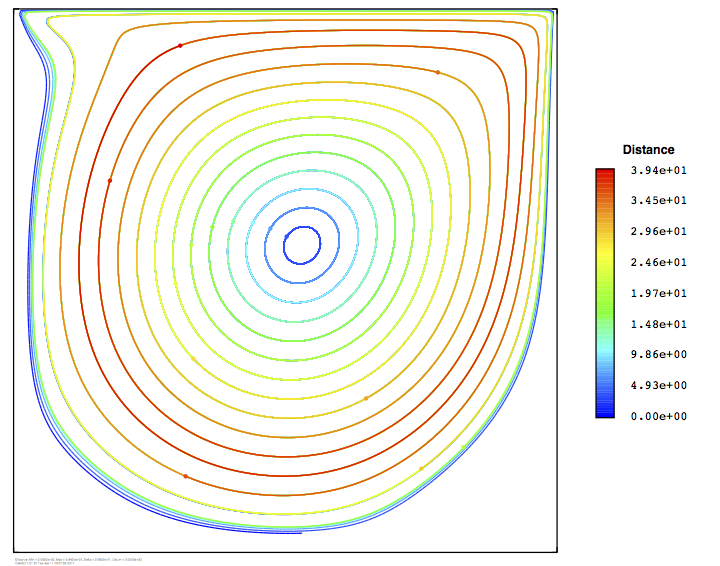

Very good pathlines can be produced using the biquadratic-linear discontinuous element. I show here pathlines for a lid-driven cavity flow at Re = 100 on a uniform mesh of 100x100 elements, after 1 time unit on the left and 5 time units on the right. After 5 time units some of the pathlines near the middle of the vortex have circled around more than 100 times without wandering at all. Some spreading has occurred that is most evident in the lower part of the cavity, particularly in one pathline that passes close to the corners of the lid where the velocity field cannot be accurately resolved. But performance is impressive overall.
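A minimal Python sketch of the idea behind this test, under stated assumptions: it substitutes an analytic, exactly divergence-free cellular flow for the computed cavity velocity (a finite element code would interpolate its discrete solution instead), integrates pathlines with classical fourth-order Runge-Kutta, and measures how far the stream function drifts along a particle's path. The `eps` parameter is a hypothetical uniform source of divergence 2·eps added to mimic spurious local divergence; with it switched on, the particle spirals off its closed streamline.

```python
import math

# Hypothetical test flow and helper names; not the lid-driven cavity solution.


def velocity(x, y, eps=0.0):
    """Cellular flow with stream function psi = sin(pi x) sin(pi y) / pi on the unit cell,
    plus an optional source eps * (x - 0.5, y - 0.5) whose divergence is 2 * eps."""
    u = math.sin(math.pi * x) * math.cos(math.pi * y) + eps * (x - 0.5)
    v = -math.cos(math.pi * x) * math.sin(math.pi * y) + eps * (y - 0.5)
    return u, v


def stream_function(x, y):
    return math.sin(math.pi * x) * math.sin(math.pi * y) / math.pi


def rk4_pathline(x, y, dt, steps, eps=0.0):
    """Integrate dx/dt = u(x) with classical RK4 and return the visited points."""
    path = [(x, y)]
    for _ in range(steps):
        k1 = velocity(x, y, eps)
        k2 = velocity(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1], eps)
        k3 = velocity(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1], eps)
        k4 = velocity(x + dt * k3[0], y + dt * k3[1], eps)
        x += dt / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        y += dt / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
        path.append((x, y))
    return path


def psi_drift(path):
    """Largest departure of the stream function from its starting value along a path."""
    psi0 = stream_function(*path[0])
    return max(abs(stream_function(x, y) - psi0) for x, y in path)


clean = rk4_pathline(0.5, 0.25, dt=0.005, steps=10000)            # t = 50, many orbits
leaky = rk4_pathline(0.5, 0.25, dt=0.005, steps=10000, eps=1e-3)  # small spurious source
print(psi_drift(clean), psi_drift(leaky))
```

In the divergence-free field the particle stays on its streamline to within integration error, while even a tiny source makes it wander steadily, which is the failure mode that poor local mass conservation invites.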

The same test repeated with the biquadratic-bilinear element is shown below. Performance is very good in the middle of the cavity, but much worse in the lower part of the cavity. The bilinear pressure basis has only about one-third as many continuity constraints as the linear discontinuous basis, resulting in a less accurate pressure field and large local divergence in some parts of the mesh. In these results 1% of the elements have divergence > 0.001 and 0.1% have divergence > 1. I'm dissatisfied with its performance here, though I never use this element type anyway, simply because a continuous pressure basis is awkward to use in many of the problems that I solve.
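The one-third figure is simple counting. A minimal Python sketch, assuming a structured n x n mesh of nine-node quadrilaterals and ignoring any pressure datum or boundary adjustments: the linear discontinuous basis carries three pressure coefficients per element, each contributing one continuity equation, while the bilinear continuous basis carries one pressure unknown per mesh vertex. The function name is hypothetical.

```python
def continuity_constraints(n):
    """Number of continuity equations on an n x n mesh for each pressure basis (hypothetical helper)."""
    linear_discontinuous = 3 * n * n    # p = a + b*x + c*y independently on each element
    bilinear_continuous = (n + 1) ** 2  # one shared pressure value per mesh vertex
    return linear_discontinuous, bilinear_continuous


disc, cont = continuity_constraints(100)  # the 100 x 100 cavity mesh
print(disc, cont, disc / cont)            # 30000 and 10201, a ratio of about 2.94
```

As the mesh is refined the ratio approaches exactly three.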

This makes me wonder about stabilized finite element methods such as pressure-stabilized Petrov-Galerkin (PSPG) and Galerkin/least-squares (GLS). Cats2D does not implement these methods currently, so I cannot test them right now, but when I used them in the past I noticed they exhibited what seemed to me uncommonly large pointwise divergence within elements, often greater than 0.1. I conjecture this degrades pathline accuracy in these methods, but I need to carry out appropriate numerical tests to say for certain. If only there were more hours in the day.

Alizarin crimson

"Color in sky, Prussian blue, scarlet fleece changes hue, crimson ball sinks from view" — Donovan, Wear Your Love Like Heaven

I've always enjoyed this song with its exotically named colors, but I learned only recently from The Joy of Painting hosted by the legendary Bob Ross that Prussian blue and alizarin crimson are common oil paint colors.

Box of rain

Here is another visualization of the merger of a pair of Lamb-Oseen vortexes. Mouse over this image to animate particle streaklines, a new feature that I just added to Cats2D. Streaklines are identical to streamlines and pathlines in a steady flow, but generally differ from them when the flow is unsteady. A streakline is the locus, at a given instant, of all particles released from the same spatial point at earlier times. It represents an experimental situation in which a flow is visualized by continuously generating small bubbles or releasing dye at fixed positions in a flow. In this visualization I have arranged their release along a horizontal line through the center of the vortexes.
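The difference between the two constructions is easy to see in a toy problem. A minimal Python sketch, assuming a hypothetical unsteady flow (a uniform stream u = 1 with a transverse oscillation v = A sin(ωt)) rather than the vortex merger: a pathline follows one particle released at t = 0, whereas a streakline collects, at a single instant, every particle released earlier from the same point. Both use the same RK4 particle advection; all names are illustrative.

```python
import math

# Hypothetical flow and helper names for illustration only.


def oscillating_stream(amplitude, freq):
    """Unsteady flow: u = 1, v = amplitude * sin(freq * t)."""
    def velocity(x, y, t):
        return 1.0, amplitude * math.sin(freq * t)
    return velocity


def advect(vel, x, y, t0, t1, dt):
    """Carry one particle from time t0 to t1 with classical RK4 in a time-dependent field."""
    n = max(1, round((t1 - t0) / dt))
    h = (t1 - t0) / n
    t = t0
    for _ in range(n):
        k1 = vel(x, y, t)
        k2 = vel(x + 0.5 * h * k1[0], y + 0.5 * h * k1[1], t + 0.5 * h)
        k3 = vel(x + 0.5 * h * k2[0], y + 0.5 * h * k2[1], t + 0.5 * h)
        k4 = vel(x + h * k3[0], y + h * k3[1], t + h)
        x += h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
        y += h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
        t += h
    return x, y


def pathline(vel, x0, y0, t_end, n, dt=1e-3):
    """Positions of ONE particle, released at (x0, y0) at t = 0, at n + 1 equally spaced times."""
    times = [t_end * k / n for k in range(n + 1)]
    points = [(x0, y0)]
    for a, b in zip(times[:-1], times[1:]):
        x, y = points[-1]
        points.append(advect(vel, x, y, a, b, dt))
    return points


def streakline(vel, x0, y0, t_end, n, dt=1e-3):
    """Positions at t_end of n + 1 particles released from (x0, y0) at equally spaced times."""
    return [advect(vel, x0, y0, t_end * k / n, t_end, dt) for k in range(n + 1)]


flow = oscillating_stream(amplitude=1.0, freq=2.0 * math.pi)
print(pathline(flow, 0.0, 0.0, 1.0, 2)[1])    # released at t = 0, seen at t = 0.5
print(streakline(flow, 0.0, 0.0, 1.0, 2)[1])  # released at t = 0.5, seen at t = 1
```

Both particles sit at x = 0.5, but on opposite sides of the release line (y = +1/π and y = -1/π), because they experienced opposite halves of the oscillation. With the amplitude set to zero the two curves coincide, as they must in a steady flow.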

I've also computed pathlines in which a single line of particles is released at the start and the paths they travel are traced. Here is how pathlines compare to streaklines after 0.001 seconds of vortex decay.

More comparisons of this kind are shown here along with an animation of the pathlines.

Allegro non troppo

Cats2D solves problems in two spatial dimensions, but we inhabit a world of three. Can a problem computed in two dimensions represent a real flow? It is an important question. A great body of work has been written on two-dimensional flows, far more than on three-dimensional flows. There is good reason to believe that many two-dimensional solutions do not represent real flows, even though they may sometimes share qualitative features with one. I restrict my attention here to laminar flows, because turbulent flows are always three-dimensional.

The nature of the two-dimensional approximation depends on whether we assume translational symmetry in planar coordinates, or rotational symmetry in cylindrical coordinates. The planar approximation assumes that the system is open and infinite in extent in the third dimension. Neither is true in practice, and real systems introduce end effects neglected by the planar approximation. In the cylindrical approximation the azimuthal direction closes on itself, so that systems of finite extent can exactly satisfy the approximation without end effects, provided the system geometry is rotationally symmetric. I will examine each of these situations in turn.

Let's start with a planar example, the two-dimensional lid-driven cavity. It's a great problem, but how well does it match the flow in a three-dimensional box with a moving surface? Not very well, it turns out, not even in a long box of square cross-section far from its ends. In a cavity of aspect ratio 3:1:1, illustrated below, laminar time-dependent flow ensues at a moderate Reynolds number of around 825, and longitudinal rolls are observed in experiments for Reynolds number above 1000. The flow is strongly three-dimensional everywhere above this transition, yet remains laminar below a transition to turbulence at a much higher Reynolds number of around 6000 (see Aidun, Triantafillopoulos, and Benson, 1991, Phys. Fluids A 3:2081–91 for experimental visualization of these phenomena).

We might think that flow at low Reynolds number is purely two-dimensional far from the ends of the box but it isn't. No-slip boundary conditions cause the flow to decelerate at the ends of the box. The flow is necessarily three-dimensional there. Viscous drag will affect flow all the way to the center symmetry plane of the cavity, though its influence may be weak there.

Let's examine more closely how this affects the three-dimensional form of the U and V equations at the center plane, where we assume that flow in the X-Y plane is symmetric with respect to Z, namely dU/dZ = dV/dZ = 0 at Z = 0. These conditions remove an inertial term from the left side of each equation, but a viscous term that is not present in the two-dimensional form remains on the right side of the equations. These terms represent the drag effect that the end walls exert at the center plane.
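For reference, here is the X-momentum equation at the center plane, assuming a common dimensionless form in which the viscous terms carry a factor 1/Re (this scaling is an assumption). The symmetry condition dU/dZ = 0 deletes the inertial term W dU/dZ, while the viscous term d²U/dZ², absent in two dimensions, survives. The Y-momentum equation behaves identically with V in place of U.

```latex
\frac{\partial U}{\partial t} + U\frac{\partial U}{\partial X} + V\frac{\partial U}{\partial Y}
  + \underbrace{W\frac{\partial U}{\partial Z}}_{=\,0 \text{ at } Z=0}
  = -\frac{\partial P}{\partial X}
  + \frac{1}{Re}\left(\frac{\partial^2 U}{\partial X^2} + \frac{\partial^2 U}{\partial Y^2}
  + \underbrace{\frac{\partial^2 U}{\partial Z^2}}_{\text{end-wall drag}}\right)
```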

Now let's examine the W component of the momentum balance and assume that W = 0 everywhere on the center plane, which implies that all derivatives of W with respect to X and Y are also zero in the plane. This assumption eliminates most of the terms in the equation.

Invoking symmetry again, we let dP/dZ = 0, which leaves d²W/dZ² = 0 at Z = 0. Since W and its second derivative both vanish at the center plane under the stated assumptions, W appears to be an odd function, namely W(Z) = -W(-Z), which is intuitively obvious. Most importantly, dW/dZ does not need to equal zero under the stated assumptions. The final inertial term on the left side of the equation, W dW/dZ, vanishes because W = 0, irrespective of the value of dW/dZ. Therefore we do not recover the two-dimensional continuity equation at the center plane: continuity requires dU/dX + dV/dY = -dW/dZ there, which is nonzero wherever dW/dZ is.
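The same reasoning in equation form, using the same assumed dimensionless scaling with 1/Re on the viscous terms: on Z = 0 with W = 0 everywhere in the plane (so W and all of its X, Y, and t derivatives vanish there), the Z-momentum equation reduces to the condition on d²W/dZ², and continuity then ties the in-plane divergence to dW/dZ. The odd profile W = aZ + bZ³ is a simple example that satisfies both center-plane conditions while keeping dW/dZ = a nonzero.

```latex
\underbrace{\frac{\partial W}{\partial t} + U\frac{\partial W}{\partial X}
  + V\frac{\partial W}{\partial Y} + W\frac{\partial W}{\partial Z}}_{=\,0 \text{ on } Z=0}
  = -\underbrace{\frac{\partial P}{\partial Z}}_{=\,0}
  + \frac{1}{Re}\Big(\underbrace{\frac{\partial^2 W}{\partial X^2}
  + \frac{\partial^2 W}{\partial Y^2}}_{=\,0} + \frac{\partial^2 W}{\partial Z^2}\Big)
  \quad\Longrightarrow\quad \frac{\partial^2 W}{\partial Z^2}\Big|_{Z=0} = 0

\frac{\partial U}{\partial X} + \frac{\partial V}{\partial Y}
  = -\frac{\partial W}{\partial Z}\Big|_{Z=0},
  \qquad \text{e.g. } W = aZ + bZ^3:\; W(0) = W''(0) = 0,\; W'(0) = a
```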

This means that the in-plane flow is not divergence free, making it fundamentally different from the two-dimensional cavity flow. An important manifestation of the difference is instability of particles to lateral perturbations on parts of the center plane, which causes them to spiral away from it. To conserve mass, particles spiral towards other parts of the center plane in compensation, setting up a recirculation in the longitudinal direction. This flow is illustrated below by pathlines and transverse velocity profiles computed at Reynolds number 500 (from Yeckel and Derby, 1997, Parallel Computing).

The effect is pronounced at this Reynolds number, but is also present and tangible at much lower Reynolds number. Critically, any transverse flow at all will disrupt the flow topology observed in the purely two-dimensional cavity flow. The corner vortexes for which the lid-driven cavity is well known are no longer entirely closed. Particles recirculating through the ends of the cavity can move from the corner vortexes to the central flow and vice versa, rather than being perpetually trapped there. Better visualization and a deeper discussion of this effect can be found in Shankar and Deshpande, 2000, Annual Review of Fluid Mechanics.

The two-dimensional idealization totally fails to capture this important characteristic of the flow because it forbids lateral motion of fluid out of the X-Y plane. This restriction also allows unrealistically large pressure gradients to form in the X-Y plane. In the three-dimensional system, freedom of lateral motion mitigates the buildup of pressure, altering the size and shape of the lower vortexes and delaying the onset of the upper vortex to higher Reynolds number.

I have used this example to illustrate two common reasons the planar two-dimensional equations fail to capture the essence of a real flow in three dimensions. One is the presence of end effects, the other is an instability of the two-dimensional solutions to three-dimensional disturbances. But are these things always a problem? Not necessarily. Rotationally symmetric systems are free of end effects, because the third spatial dimension, the azimuthal coordinate, closes on itself. Solutions computed in cylindrical coordinates represent realizable three-dimensional flows of practical significance, unlike the more idealized planar approximation.

Furthermore, it is natural to express the azimuthal dependence of a disturbance in trigonometric functions, making it simple to formulate the linearized stability response to arbitrary three-dimensional disturbances. This gives a useful bound on the validity of a solution in a rotationally symmetric system without needing to perform an expensive simulation in three spatial coordinates. Cats2D does not presently have such a feature, but I am contemplating adding it. Many of the physical systems I study are designed to approximate rotational symmetry, particularly in melt crystal growth. It is reassuring to know that solutions computed in cylindrical coordinates represent real three-dimensional flows. It would be even more reassuring to ascertain their stability to arbitrary three-dimensional disturbances. Let's call it reality-based computing.
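In equation form, the standard normal-mode approach (a general technique, not a description of any existing Cats2D feature) writes a small disturbance to an axisymmetric base state as a sum of azimuthal Fourier modes. Because the base state does not depend on θ, the linearized equations decouple by azimuthal wavenumber m, and each mode yields a two-dimensional generalized eigenvalue problem in (r, z). Here J_m and B are generic symbols, assumed for illustration, for the mode-m linearized operator and the mass matrix, and x̂_m collects the velocity and pressure amplitudes.

```latex
\mathbf{u}'(r,z,\theta,t) = \sum_{m=0}^{\infty} \hat{\mathbf{u}}_m(r,z)\, e^{\,i m \theta + \sigma_m t},
\qquad
\sigma_m\, \mathbf{B}\, \hat{\mathbf{x}}_m = \mathbf{J}_m\, \hat{\mathbf{x}}_m,
\qquad
\text{linearly stable if } \operatorname{Real}(\sigma_m) < 0 \text{ for every } m
```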

Catherine wheel

Here is a sample image from my latest fun problem, the merger of a pair of Lamb-Oseen vortexes (see Brandt and Nomura, 2007, to learn more about this type of flow).

Watch this animation of a line of particles released into the merging vortexes.

Blah blah blah, Ginger

Time to start ranting about some of my pet peeves as a scientist. This one stems from a long-running discussion with my father about U.S. drug policy, in the course of which he showed me this report published by an anti-drug think tank. The report presents a flowchart, shown here, described as a model of substance abuse. Don't get caught up studying it too deeply, though. There is less here than it seems: